The Mystery of the Second Law

Entropy increases. Mechanical work irreversibly turns into heat. The Second Law of thermodynamics is considered one of the great general principles of physical science. But 150 years after it was first introduced, there’s still something deeply mysterious about the Second Law. It almost seems like it’s going to be “provably true”. But one never quite gets there; it always seems to need something extra. Sometimes textbooks will gloss over everything; sometimes they’ll give some kind of “common-sense-but-outside-of-physics argument”. But the mystery of the Second Law has never gone away.

Why does the Second Law work? And does it even in fact always work, or is it actually sometimes violated? What does it really depend on? What would be needed to “prove it”?

For me personally the quest to understand the Second Law has been no less than a 50-year story. But back in the 1980s, as I began to explore the computational universe of simple programs, I discovered a fundamental phenomenon that was immediately reminiscent of the Second Law. And in the 1990s I started to map out just how this phenomenon might finally be able to demystify the Second Law. But it is only now—with ideas that have emerged from our Physics Project—that I think I can pull all the pieces together and finally be able to construct a proper framework to explain why—and to what extent—the Second Law is true.

In its usual conception, the Second Law is a law of thermodynamics, concerned with the dynamics of heat. But it turns out that there’s a vast generalization of it that’s possible. And in fact my key realization is that the Second Law is ultimately just a manifestation of the very same core computational phenomenon that is at the heart of our Physics Project and indeed the whole conception of science that is emerging from our study of the ruliad and the multicomputational paradigm.

It’s all a story of the interplay between underlying computational irreducibility and our nature as computationally bounded observers. Other observers—or even our own future technology—might see things differently. But at least for us now the ubiquity of computational irreducibility leads inexorably to the generation of behavior that we—with our computationally bounded nature—will read as “random”. We might start from something highly ordered (like gas molecules all in the corner of a box) but soon—at least as far as we’re concerned—it will typically seem to “randomize”, just as the Second Law implies.

In the twentieth century there emerged three great physical theories: general relativity, quantum mechanics and statistical mechanics, with the Second Law being the defining phenomenon of statistical mechanics. But while there was a sense that statistical mechanics (and in particular the Second Law) should somehow be “formally derivable”, general relativity and quantum mechanics seemed quite different. But our Physics Project has changed that picture. And the remarkable thing is that it now seems as if all three of general relativity, quantum mechanics and statistical mechanics are actually derivable, and from the same ultimate foundation: the interplay between computational irreducibility and the computational boundedness of observers like us.

The case of statistical mechanics and the Second Law is in some ways simpler than the other two because in statistical mechanics it’s realistic to separate the observer from the system they’re observing, while in general relativity and quantum mechanics it’s essential that the observer be an integral part of the system. It also helps that phenomena about things like molecules in statistical mechanics are much more familiar to us today than those about atoms of space or branches of multiway systems. And by studying the Second Law we’ll be able to develop intuition that we can use elsewhere, say in discussing “molecular” vs. “fluid” levels of description in my recent exploration of the physicalization of the foundations of metamathematics.

The Core Phenomenon of the Second Law

The earliest statements of the Second Law were things like: “Heat doesn’t flow from a colder body to a hotter one” or “You can’t systematically purely convert heat to mechanical work”. Later on there came the somewhat more abstract statement “Entropy tends to increase”. But in the end, all these statements boil down to the same idea: that somehow things always tend to get progressively “more random”. What may start in an orderly state will—according to the Second Law—inexorably “degrade” to a “randomized” state.

But how general is this phenomenon? Does it just apply to heat and temperature and molecules and things? Or is it something that applies across a whole range of kinds of systems?

The answer, I believe, is that underneath the Second Law there’s a very general phenomenon that’s extremely robust. And that has the potential to apply to pretty much any kind of system one can imagine.

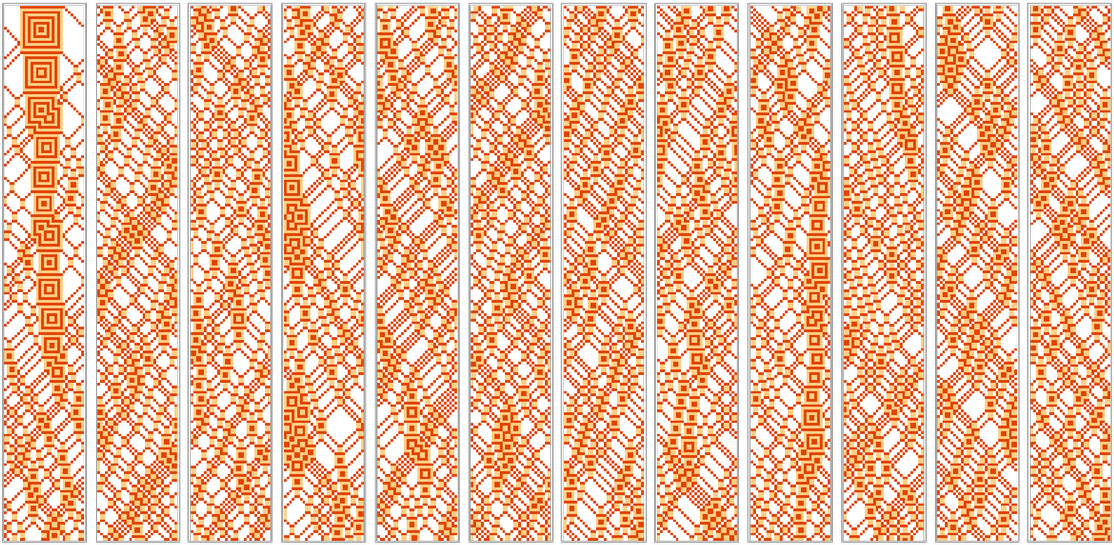

Here’s a longtime favorite example of mine: the rule 30 cellular automaton:

Start from a simple “orderly” state, here containing just a single non-white cell. Then apply the rule over and over again. The pattern that emerges has some definite, visible structure. But many aspects of it “seem random”. Just as in the Second Law, even starting from something “orderly”, one ends up getting something “random”.
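The rule itself is tiny. Here is a minimal Python sketch of it. The function names, the cyclic wrap-around at the edges and the text rendering are illustrative choices, not taken from the original.

```python
RULE = 30

def step(row, rule=RULE):
    """Apply a 2-color nearest-neighbor rule once; the row wraps around cyclically."""
    n = len(row)
    # New value of cell i = bit number (4*left + 2*center + right) of the rule number.
    return [(rule >> (4 * row[i - 1] + 2 * row[i] + row[(i + 1) % n])) & 1
            for i in range(n)]

def evolve(width, steps, rule=RULE):
    """Start from a single non-white cell in the middle and record every step."""
    row = [0] * width
    row[width // 2] = 1
    history = [row]
    for _ in range(steps):
        row = step(row, rule)
        history.append(row)
    return history

for r in evolve(width=63, steps=31):
    print("".join("#" if c else "." for c in r))
```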

But is it “really random”? It’s completely determined by the initial condition and rule, and you can always recompute it. But the subtle yet critical point is that if you’re just given the output, it can still “seem random” in the sense that no known methods operating purely on this output can find regularities in it.

It’s reminiscent of the situation with something like the digits of π. There’s a fairly simple algorithm for generating these digits. Yet once generated, the digits on their own seem for practical purposes random.
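As one example of such an algorithm (swapped in here for illustration, not taken from the text), Gibbons' unbounded spigot emits the decimal digits of π one at a time using only integer arithmetic:

```python
from itertools import islice

def pi_digits():
    """Yield the decimal digits of pi one at a time (Gibbons' unbounded spigot)."""
    q, r, t, k, n, l = 1, 0, 1, 1, 3, 3
    while True:
        if 4 * q + r - t < n * t:
            # The next digit n is now certain: emit it and rescale the state by 10.
            yield n
            q, r, n = 10 * q, 10 * (r - n * t), (10 * (3 * q + r)) // t - 10 * n
        else:
            # Not yet certain: fold in one more term of the underlying series.
            q, r, t, k, n, l = (q * k, (2 * q + r) * l, t * l, k + 1,
                                (q * (7 * k + 2) + r * l) // (t * l), l + 2)

print("".join(map(str, islice(pi_digits(), 20))))  # 31415926535897932384
```

The generator is only a dozen lines, yet the stream it produces passes as "random" to any simple statistical test one might apply to the digits alone.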

In studying physical systems there’s a long history of assuming that whenever randomness is seen, it somehow comes from outside the system. Maybe it’s the effect of “thermal noise” or “perturbations” acting on the system. Maybe it’s chaos-theory-style “excavation” of higher-order digits supplied through real-number initial conditions. But the surprising discovery I made in the 1980s by looking at things like rule 30 is that actually no such “external source” is needed: instead, it’s perfectly possible for randomness to be generated intrinsically within a system just through the process of applying definite underlying rules.

How can one understand this? The key is to think in computational terms. And ultimately the source of the phenomenon is the interplay between the computational process associated with the actual evolution of the system and the computational processes that our perception of the output of that evolution brings to bear.

We might have thought if a system had a simple underlying rule—like rule 30—then it’d always be straightforward to predict what the system will do. Of course, we could in principle always just run the rule and see what happens. But the question is whether we can expect to “jump ahead” and “find the outcome”, with much less computational effort than the actual evolution of the system involves.

And an important conclusion of a lot of science I did in the 1980s and 1990s is that for many systems—presumably including rule 30—it’s simply not possible to “jump ahead”. And instead the evolution of the system is what I call computationally irreducible—so that it takes an irreducible amount of computational effort to find out what the system does.
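A concrete contrast makes "jumping ahead" precise. Rule 90 (not discussed in this section; used here only as an illustration) is a case where it is possible: started from one black cell, the cell at offset x after t steps equals the binomial coefficient C(t, (t+x)/2) mod 2, which Lucas' theorem lets us read off from the binary digits of t in constant time. No comparable shortcut is known for rule 30, so for it one can only call the step-by-step `run`. The function names are illustrative.

```python
def eca_step(row, rule):
    """One step of a 2-color nearest-neighbor rule on a cyclic row."""
    n = len(row)
    return [(rule >> (4 * row[i - 1] + 2 * row[i] + row[(i + 1) % n])) & 1
            for i in range(n)]

def run(rule, t, width):
    """The irreducible route: simulate t steps from a single black cell."""
    row = [0] * width
    row[width // 2] = 1
    for _ in range(t):
        row = eca_step(row, rule)
    return row

def rule90_shortcut(t, x):
    """The reducible route for rule 90: the cell at offset x after t steps, directly.

    It equals C(t, k) mod 2 with k = (t + x) / 2, and by Lucas' theorem that is 1
    exactly when every binary 1 of k is also a binary 1 of t."""
    if abs(x) > t or (t + x) % 2:
        return 0
    k = (t + x) // 2
    return 1 if (k & t) == k else 0

t, width = 40, 101          # wide enough that the pattern never wraps around
center = width // 2
simulated = run(90, t, width)
shortcut = [rule90_shortcut(t, i - center) for i in range(width)]
print(simulated == shortcut)  # True
```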

Ultimately this is a consequence of what I call the Principle of Computational Equivalence, which states that above some low threshold, systems always end up being equivalent in the sophistication of the computations they perform. And this is why even our brains and our most sophisticated methods of scientific analysis can’t “computationally outrun” even something like rule 30, so that we must consider it computationally irreducible.

So how does this relate to the Second Law? It’s what makes it possible for a system like rule 30 to operate according to a simple underlying rule, yet to intrinsically generate what seems like random behavior. If we could do all the necessary computationally irreducible work then we could in principle “see through” to the simple rules underneath. But the key point (emphasized by our Physics Project) is that observers like us are computationally bounded in our capabilities. And this means that we’re not able to “see through the computational irreducibility”—with the result that the behavior we see “looks random to us”.

And in thermodynamics that “random-looking” behavior is what we associate with heat. And the Second Law assertion that energy associated with systematic mechanical work tends to “degrade into heat” then corresponds to the fact that when there’s computational irreducibility the behavior that’s generated is something we can’t readily “computationally see through”—so that it appears random to us.

The Road from Ordinary Thermodynamics

Systems like rule 30 make the phenomenon of intrinsic randomness generation particularly clear. But how do such systems relate to the ones that thermodynamics usually studies? The original formulation of the Second Law involved gases, and the vast majority of its applications even today still concern things like gases.

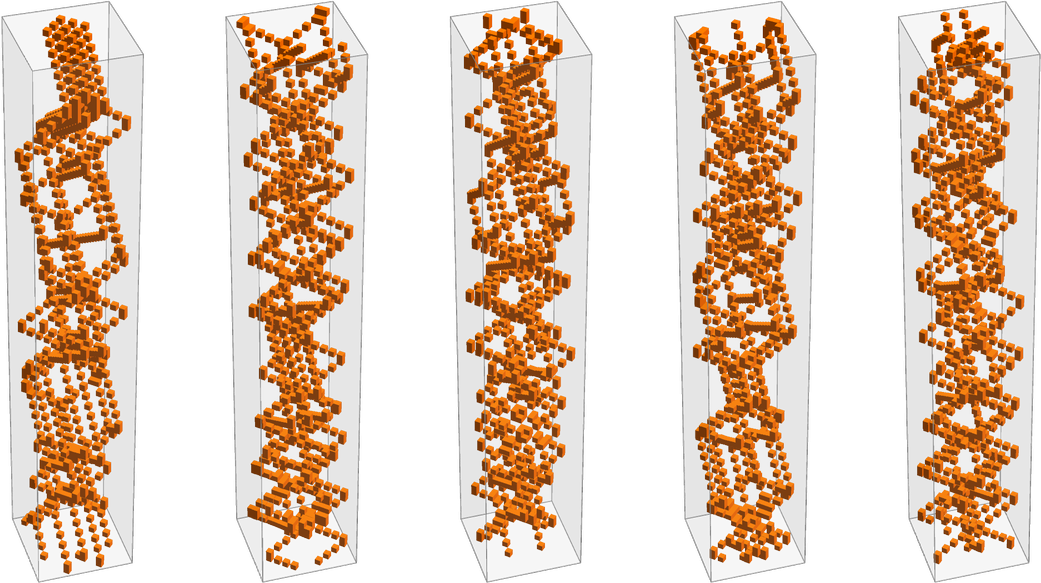

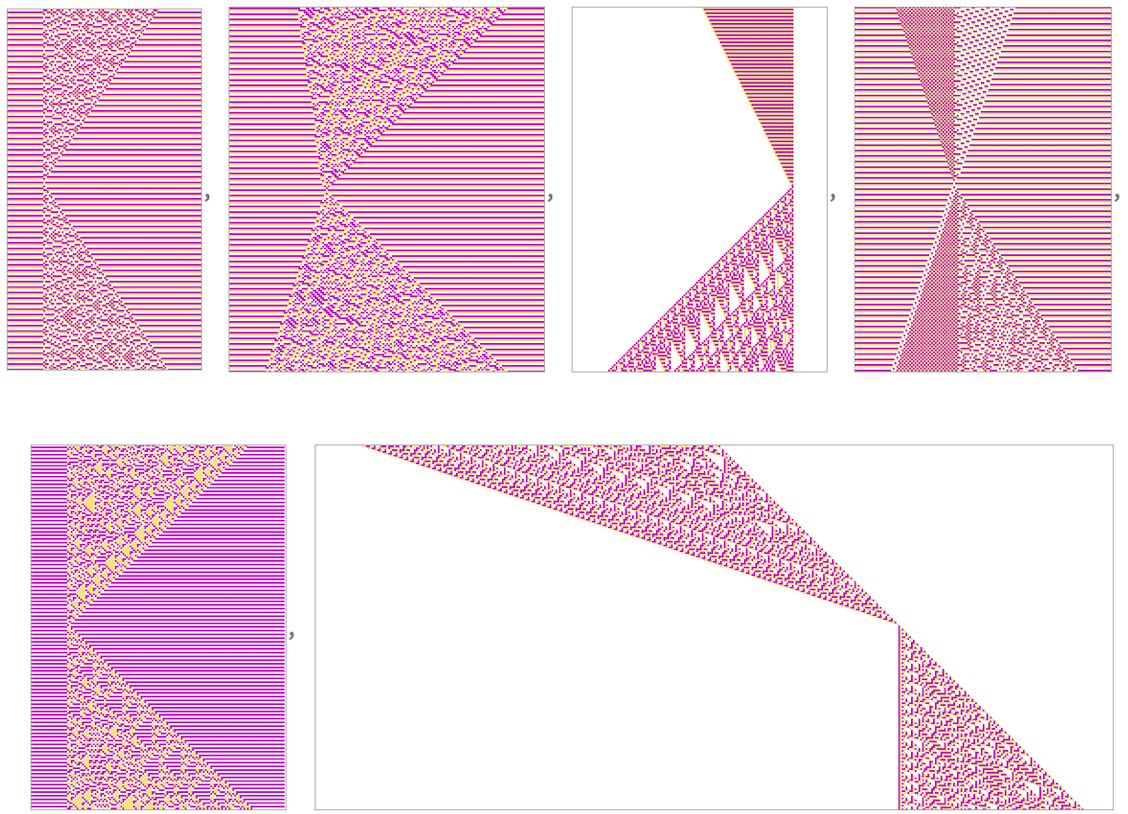

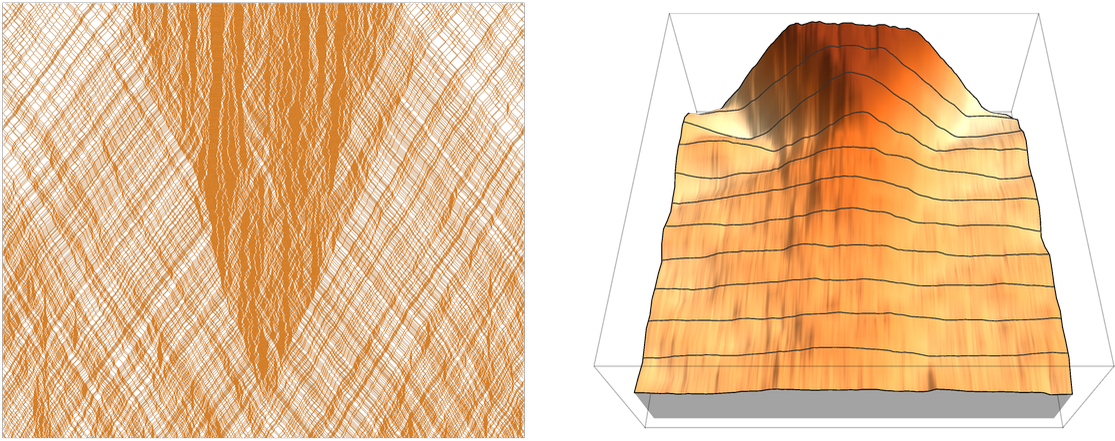

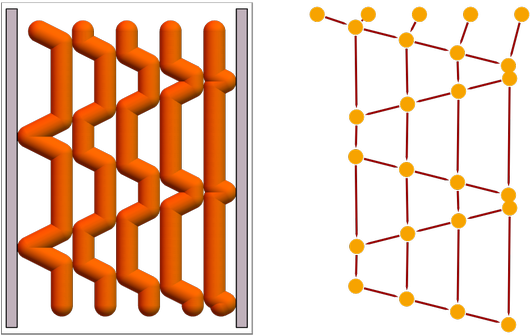

At a basic level, a typical gas consists of a collection of discrete molecules that interact through collisions. And as an idealization of this, we can consider hard spheres that move according to the standard laws of mechanics and undergo perfectly elastic collisions with each other, and with the walls of a container. Here’s an example of a sequence of snapshots from a simulation of such a system, done in 2D:

We begin with an organized “flotilla” of “molecules”, systematically going in a particular direction (and not touching, to avoid a “Newton’s cradle” many-collisions-at-a-time effect). But after these molecules collide with a wall, they quickly start to move in what seem like much more random ways. The original systematic motion is like what happens when one is “doing mechanical work”, say moving a solid object. But what we see is that—just as the Second Law implies—this motion is quickly “degraded” into disordered and seemingly random “heat-like” microscopic motion.

Here’s a “spacetime” view of the behavior:

Looking from far away, with each molecule’s spacetime trajectory shown as a slightly transparent tube, we get:

There’s already some qualitative similarity with the rule 30 behavior we saw above. But there are many detailed differences. And one of the most obvious is that while rule 30 just has a discrete collection of cells, the spheres in the hard-sphere gas can be at any position. And, what’s more, the precise details of their positions can have an increasingly large effect. If two elastic spheres collide perfectly head-on, they’ll bounce back the way they came. But as soon as they’re even slightly off center they’ll bounce back at a different angle, and if they do this repeatedly even the tiniest initial off-centeredness will be arbitrarily amplified:

And, yes, this chaos-theory-like phenomenon makes it very difficult even to do an accurate simulation on a computer with limited numerical precision. But does it actually matter to the core phenomenon of randomization that’s central to the Second Law?
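To see where the sensitivity comes from, here is the standard velocity update for a perfectly elastic collision between two equal-mass, frictionless discs, as a sketch (the function name and argument layout are hypothetical). The velocity components along the line joining the centers at contact are exchanged and the perpendicular components are kept, so the outgoing directions depend on exactly where the discs touch; a tiny offset in the contact point tilts that line and changes the angles at which both discs leave.

```python
import math

def collide_equal_discs(p1, v1, p2, v2):
    """Velocities after an elastic collision of two equal-mass discs in 2D.

    p1, p2 are the centers at the moment of contact; v1, v2 the incoming velocities."""
    dx, dy = p2[0] - p1[0], p2[1] - p1[1]
    dist = math.hypot(dx, dy)
    nx, ny = dx / dist, dy / dist            # unit vector along the line of centers
    # Relative velocity along that line: the part the two discs exchange.
    rel = (v1[0] - v2[0]) * nx + (v1[1] - v2[1]) * ny
    return ((v1[0] - rel * nx, v1[1] - rel * ny),
            (v2[0] + rel * nx, v2[1] + rel * ny))

# Head-on: the two discs bounce back the way they came.
print(collide_equal_discs((0, 0), (1, 0), (1, 0), (-1, 0)))  # ((-1.0, 0.0), (1.0, 0.0))

# Off center (contact line tilted by 0.1 rad): both discs leave at new angles,
# while total momentum and kinetic energy are unchanged.
a, b = collide_equal_discs((0, 0), (1, 0), (math.cos(0.1), math.sin(0.1)), (0, 0))
print(a, b)
```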

To begin testing this, let’s consider not hard spheres but instead hard squares (where we assume that the squares always stay in the same orientation, and ignore the mechanical torques that would lead to spinning). If we set up the same kind of “flotilla” as before, with the edges of the squares aligned with the walls of the box, then things are symmetrical enough that we don’t see any randomization—and in fact the only nontrivial thing that happens is a little

Viewed in “spacetime” we can see the “flotilla” is just bouncing unchanged off the walls:

But remove even a tiny bit of the symmetry—here by roughly doubling the “masses” of some of the squares and “riffling” their positions (which also avoids singular multi-square collisions)—and we get:

In “spacetime” this becomes

or “from the side”:

So despite the lack of chaos-theory-like amplification behavior (or any associated loss of numerical precision in our simulations), there’s still rapid “degradation” to a certain apparent randomness.
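Part of why the hard-square case avoids precision loss can be seen in the collision update itself. Assuming frictionless, axis-aligned squares that meet face to face, only the velocity component along that axis changes, and the new value is a rational function of the masses and velocities. With exact rational arithmetic, a simulation therefore never rounds. A sketch under those assumptions (the function name and the mass value 2 are illustrative):

```python
from fractions import Fraction

def collide_1d(m1, u1, m2, u2):
    """Velocities along the collision axis after a perfectly elastic collision.

    For axis-aligned hard squares meeting face to face, only this one velocity
    component changes; the perpendicular component is untouched."""
    total = m1 + m2
    w1 = ((m1 - m2) * u1 + 2 * m2 * u2) / total
    w2 = ((m2 - m1) * u2 + 2 * m1 * u1) / total
    return w1, w2

# A "heavy" square (mass 2) hits a light one (mass 1) that is at rest.
heavy, light = Fraction(2), Fraction(1)
w = collide_1d(heavy, Fraction(1), light, Fraction(0))
print(w)  # (Fraction(1, 3), Fraction(4, 3))
```

Momentum (2 = 2/3 + 4/3) and kinetic energy are conserved exactly, with no floating-point error to accumulate over many collisions.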

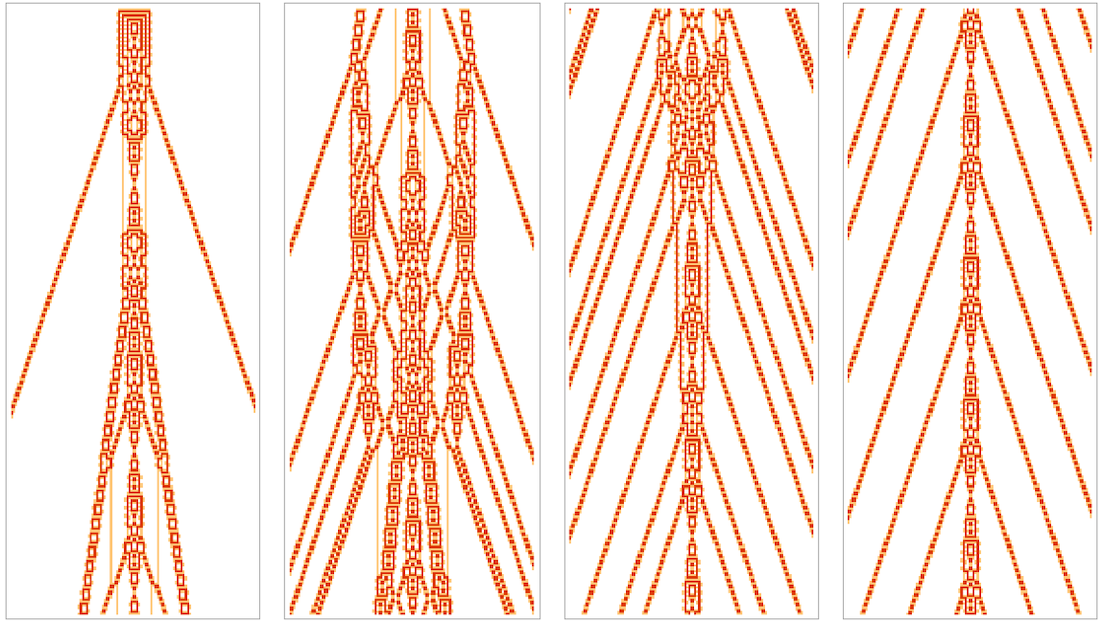

So how much further can we go? In the hard-square gas, the squares can still be at any location, and be moving at any speed in any direction. As a simpler system (that I happened to first investigate a version of nearly 50 years ago), let’s consider a discrete grid in which idealized molecules have discrete directions and are either present or not on each edge:

The system operates in discrete steps, with the molecules at each step moving or “scattering” according to the rules (up to rotations)

and interacting with the “walls” according to:

Running this starting with a “flotilla” we get on successive steps:

Or, sampling every 10 steps:

In “spacetime” this becomes (with the arrows tipped to trace out “worldlines”)

or “from the side”:
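The collision rules for this model appear only as pictures, so as a stand-in this sketch uses the well-known HPP lattice-gas rule. At each site, exactly two particles meeting head-on leave at right angles, and every other configuration passes straight through. It also replaces the walls with periodic wrap-around. Both choices are assumptions, not the author's exact model. The point is only to show how simply such a rule can be written, and that it conserves particle number and momentum.

```python
import random

# Velocity channels at each site: 0 = east, 1 = north, 2 = west, 3 = south.
# Offsets are (dx, dy) with y increasing downward.
MOVES = [(1, 0), (0, -1), (-1, 0), (0, 1)]

def collide(cell):
    """HPP collision: an exactly head-on pair (and nothing else) turns by 90 degrees."""
    if cell == (1, 0, 1, 0):
        return (0, 1, 0, 1)
    if cell == (0, 1, 0, 1):
        return (1, 0, 1, 0)
    return cell

def step(grid):
    """Collide at every site, then move each particle one site along its channel.
    The grid wraps around (a torus) instead of having walls."""
    h, w = len(grid), len(grid[0])
    new = [[[0, 0, 0, 0] for _ in range(w)] for _ in range(h)]
    for y in range(h):
        for x in range(w):
            cell = collide(tuple(grid[y][x]))
            for d, (dx, dy) in enumerate(MOVES):
                if cell[d]:
                    new[(y + dy) % h][(x + dx) % w][d] = 1
    return new

def particle_count(grid):
    return sum(sum(cell) for row in grid for cell in row)

def momentum(grid):
    """Net (x, y) momentum, counting each particle as one unit along its channel."""
    px = sum(cell[0] - cell[2] for row in grid for cell in row)
    py = sum(cell[3] - cell[1] for row in grid for cell in row)
    return px, py

random.seed(0)
grid = [[[random.randint(0, 1) for _ in range(4)] for _ in range(16)] for _ in range(12)]
start = (particle_count(grid), momentum(grid))
for _ in range(100):
    grid = step(grid)
print(start, (particle_count(grid), momentum(grid)))  # the two pairs agree
```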

And again we see at least a certain level of “randomization”. With this model we’re getting quite close to the setup of something like rule 30. And reformulating this same model we can get even closer. Instead of having “particles” with explicit “velocity directions”, consider just having a grid in which an alternating pattern of 2×2 blocks are updated at each step according to

and the “wall” rules

as well as the “rotations” of all these rules. With this “block cellular automaton” setup, “isolated particles” move according to the single-particle rule, like the pieces on a checkerboard:

A “flotilla” of particles—like equal-mass hard squares—has rather simple behavior in the “square enclosure”:

In “spacetime” this is just:

But if we add even a single fixed (“single-cell-of-wall”) “obstruction cell” (here at the very center of the box, so preserving reflection symmetry) the behavior is quite different:

In “spacetime” this becomes (with the “obstruction cell” shown in gray)

or “from the side” (with the “obstruction” sometimes getting obscured by cells in front):

As it turns out, the block cellular automaton model we’re using here is actually functionally identical to the “discrete velocity molecules” model we used above, as the correspondence of their rules indicates:

And seeing this correspondence one gets the idea of considering a “rotated container”—which no longer gives simple behavior even without any kind of “interior fixed obstruction cell”:

Here’s the corresponding “spacetime” view

and here’s what it looks like “from the side”:

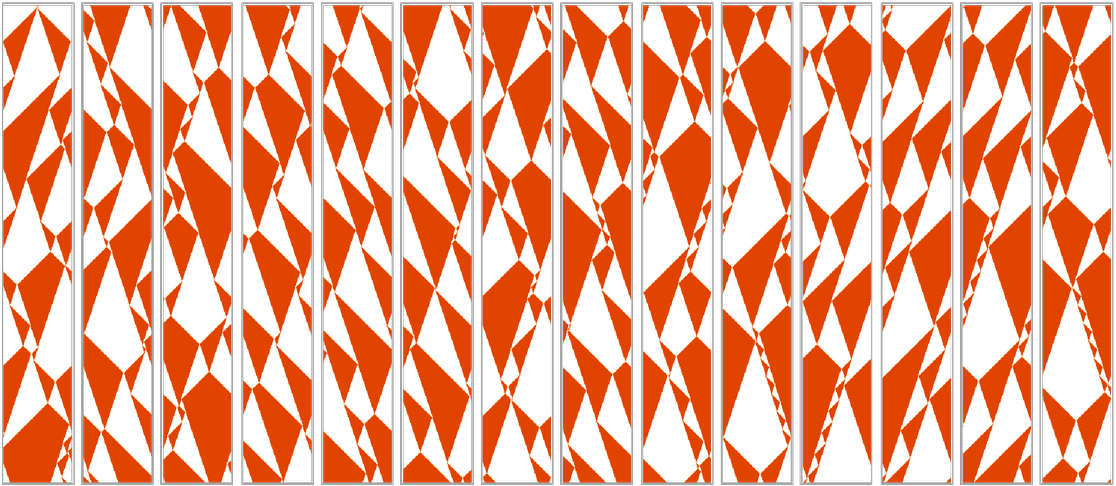

Here’s a larger version of the same setup (though no longer with exact symmetry) sampled every 50 steps:

And, yes, it’s increasingly looking as if there’s intrinsic randomness generation going on, much like in rule 30. But if we go a little further the correspondence becomes even clearer.

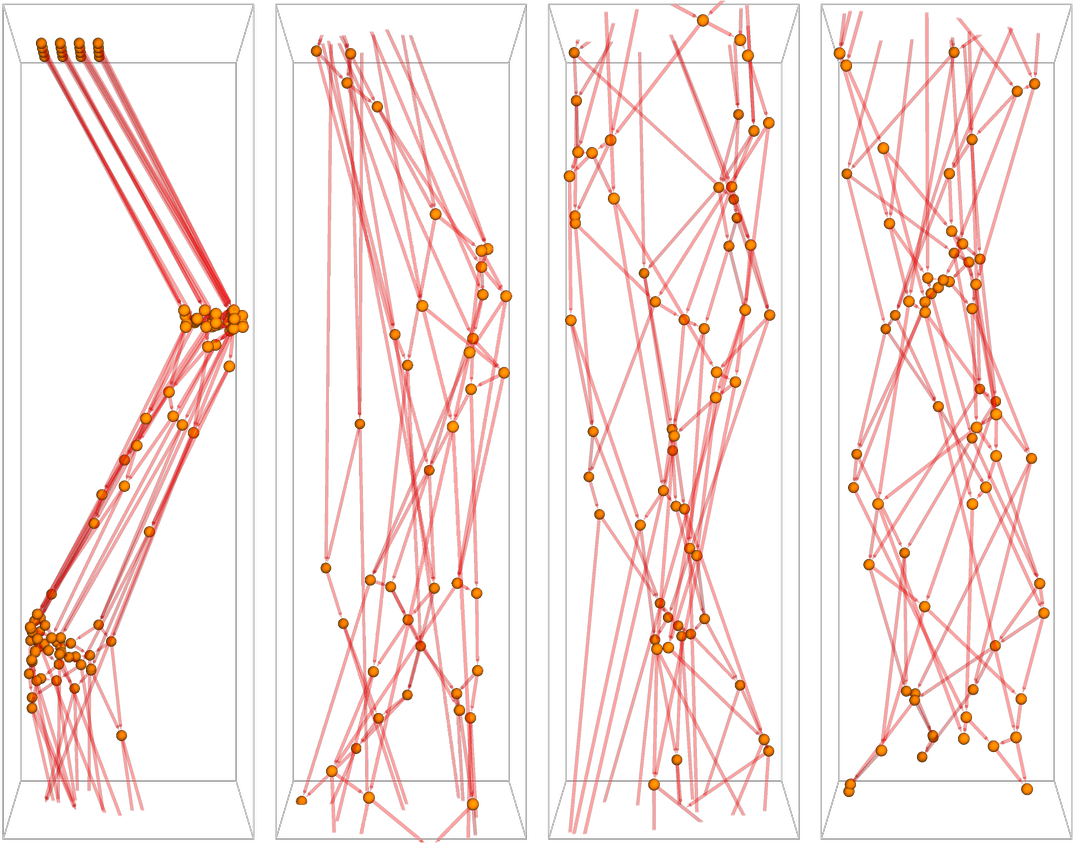

The systems we’ve been looking at so far have all been in 2D. But what if—like in rule 30—we consider 1D? It turns out we can set up very much the same kind of “gas-like” block cellular automata. Though with blocks of size 2 and two possible values for each cell, there’s only one viable rule

where in effect the only nontrivial transformation is:

(In 1D we can also make things simpler by not using explicit “walls”, but instead just wrapping the array of cells around cyclically.) Here’s then what happens with this rule with a few possible initial states:

And what we see is that in all cases the “particles” effectively just “pass through each other” without really “interacting”. But we can make there be something closer to “real interactions” by introducing another color, and adding a transformation which effectively introduces a “time delay” to each “crossover” of particles (as an alternative, one can also stay with 2 colors, and use size-3 blocks):

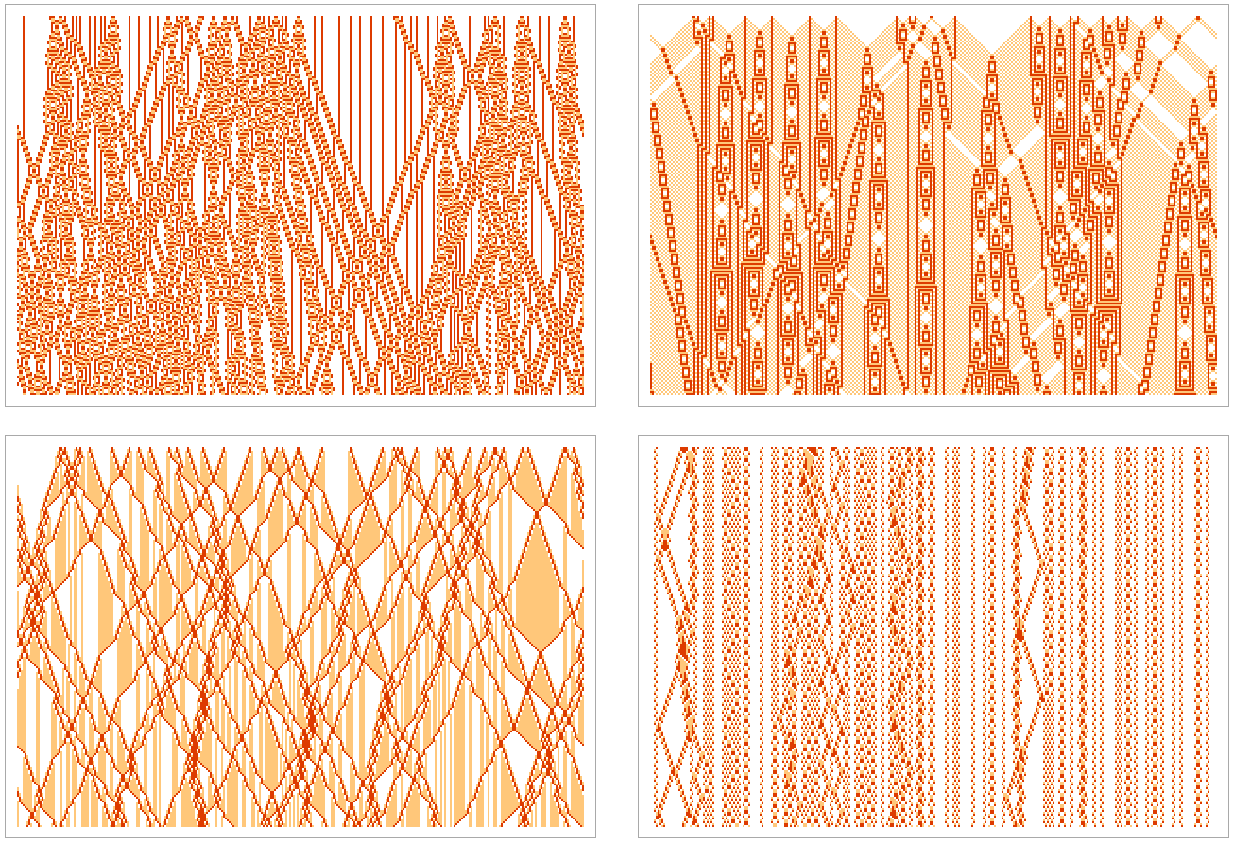

And with this “delayed particle” rule (that, as it happens, I first studied in 1986) we get:

With sufficiently simple initial conditions this still gives simple behavior, such as:

But as soon as one reaches the 121st initial condition, one sees:

(As we’ll discuss below, in a finite-size region of the kind we’re using, it’s inevitable that the pattern eventually repeats, though in the particular case shown it takes 7022 steps.) Here’s a slightly larger example, in which there’s clearer “progressive degradation” of the initial condition to apparent randomness:

We’ve come quite far from our original hard-sphere “realistic gas molecules”. But there’s even further to go. With hard spheres there’s built-in conservation of energy, momentum and number of particles. And we don’t specifically have these things anymore. But the rule we’re using still does have conservation of the number of non-white cells. Dropping this requirement, we can have rules like

which gradually “fill in with particles”:

What happens if we just let this “expand into a vacuum”, without any “walls”? The behavior is complex. And as is typical when there’s computational irreducibility, it’s at first hard to know what will happen in the end. For this particular initial condition everything becomes essentially periodic (with period 70) after 979 steps:

But with a slightly different initial condition, it seems to have a good chance of growing forever:

With slightly different rules (that here happen not to be left-right symmetric) we start seeing rapid “expansion into the vacuum”, basically just like rule 30:

The whole setup here is very close to what it is for rule 30. But there’s one more feature we’ve carried over here from our hard-sphere gas and other models. Just like in standard classical mechanics, every part of the underlying rule is reversible, in the sense that if the rule says that block u goes to block v it also says that block v goes to block u.
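This property is easy to state in code. The sketch below assumes a concrete rule for illustration: two colors, blocks of size 2, with 01 ↔ 10 as the only nontrivial transformation (one natural reading of the simple particle rule described earlier, not necessarily the author's exact rule). The block partition alternates between even and odd offsets on successive steps, and the array wraps around cyclically. Because each block update undoes itself, replaying the partitions in reverse order runs the system exactly backward. All names are illustrative.

```python
# Assumed example rule: 00 and 11 unchanged, 01 <-> 10.
SWAP_RULE = {(0, 0): (0, 0), (0, 1): (1, 0), (1, 0): (0, 1), (1, 1): (1, 1)}
BLOCK = 2  # block size; the array length must be a multiple of it

def is_self_inverse(rule):
    """True if whenever the rule sends block u to block v it also sends v to u."""
    return all(rule[v] == u for u, v in rule.items())

def block_step(cells, rule, offset):
    """Update the cyclic array in consecutive size-2 blocks starting at `offset`."""
    n = len(cells)
    out = list(cells)
    for start in range(offset, offset + n, BLOCK):
        idx = [(start + j) % n for j in range(BLOCK)]
        new_block = rule[tuple(cells[i] for i in idx)]
        for i, value in zip(idx, new_block):
            out[i] = value
    return out

def evolve(cells, rule, steps):
    """Run forward, alternating the block partition between offsets 0 and 1."""
    for s in range(steps):
        cells = block_step(cells, rule, s % BLOCK)
    return cells

def run_backward(cells, rule, steps):
    """Undo `evolve(..., steps)` for a self-inverse rule by replaying partitions in reverse."""
    for s in reversed(range(steps)):
        cells = block_step(cells, rule, s % BLOCK)
    return cells

initial = [1, 1, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0]
later = evolve(initial, SWAP_RULE, 37)
print(later, run_backward(later, SWAP_RULE, 37) == initial)
```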

Rules like

remove this restriction but produce behavior that’s qualitatively no different from the reversible rules above.

But now we’ve got to systems that are basically set up just like rule 30. (They happen to be block cellular automata rather than ordinary ones, but that really doesn’t matter.) And, needless to say, being set up like rule 30, they show the same kind of intrinsic randomness generation as rule 30 itself.

We started here from a “physically realistic” hard-sphere gas model—which we’ve kept on simplifying and idealizing. And what we’ve found is that through all this simplification and idealization, the same core phenomenon has remained: that even starting from “simple” or “ordered” initial conditions, complex and “apparently random” behavior is somehow generated, just like it is in typical Second Law behavior.

At the outset we might have assumed that getting this kind of “Second Law behavior” would require at least quite a few features of physics. But what we’ve discovered is that this isn’t the case. Instead, we’ve got evidence that the core phenomenon is much more robust and in a sense purely computational.

Indeed, it seems that as soon as there’s computational irreducibility in a system, it’s basically inevitable that we’ll see the phenomenon. And since from the Principle of Computational Equivalence we expect that computational irreducibility is ubiquitous, the core phenomenon of the Second Law will in the end be ubiquitous across a vast range of systems, from things like hard-sphere gases to things like rule 30.

Reversibility, Irreversibility and Equilibrium

Our typical everyday experience shows a certain fundamental irreversibility. An egg can readily be scrambled. But you can’t easily reverse that: it can’t readily be unscrambled. And indeed this kind of one-way transition from order to disorder—but not back—is what the Second Law is all about. But there’s immediately something mysterious about this. Yes, there’s irreversibility at the level of things like eggs. But if we drill down to the level of atoms, the physics we know says there’s basically perfect reversibility. So where is the irreversibility coming from? This is a core (and often confused) question about the Second Law, and in seeing how it resolves we will end up face to face with fundamental issues about the character of observers and their relationship to computational irreducibility.

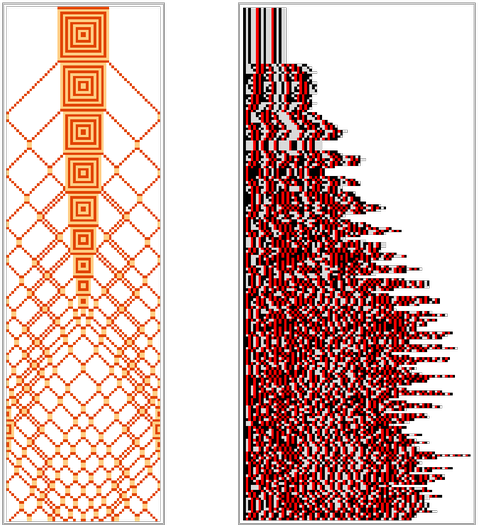

A “particle cellular automaton” like the one from the previous section

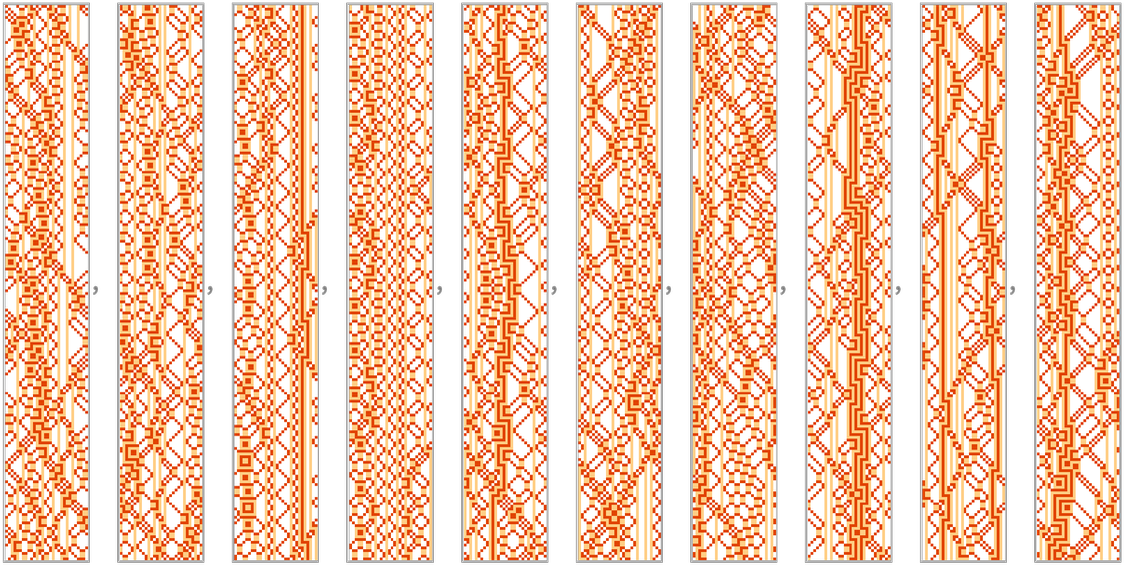

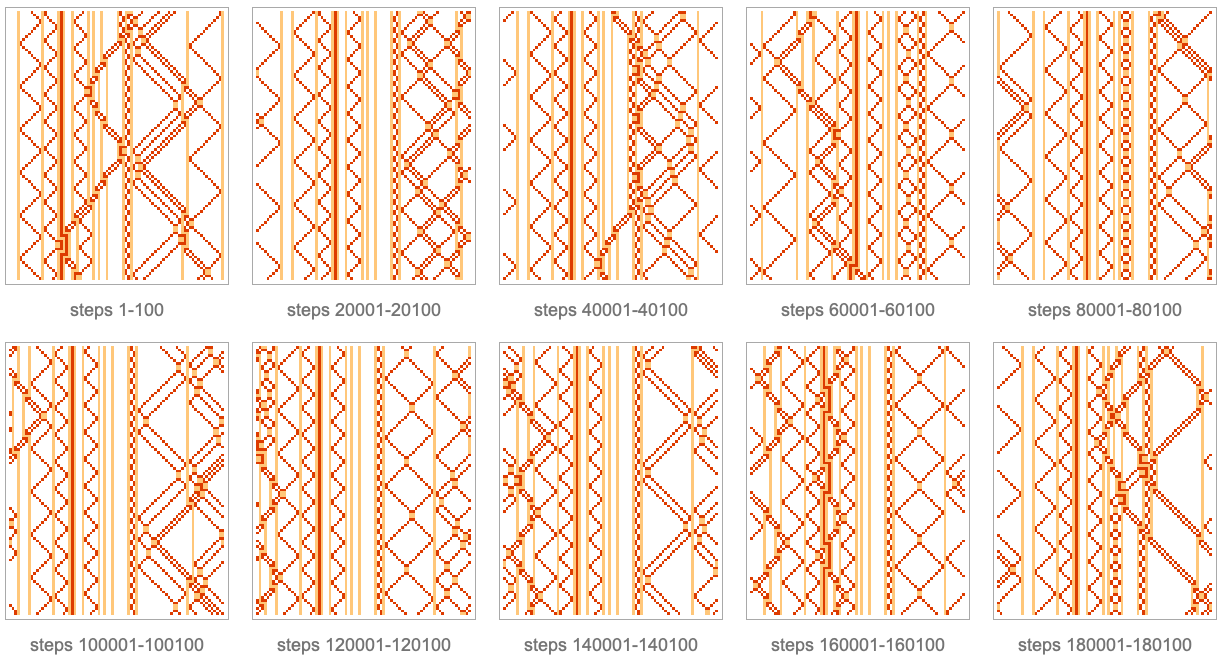

has transformations that “go both ways”, making its rule perfectly reversible. Yet we saw above that if we start from a “simple initial condition” and then just run the rule, it will “produce increasing randomness”. But what if we reverse the rule, and run it backwards? Well, since the rule is reversible, the same thing must happen: we must get increasing randomness. But how can it be that “randomness increases” both going forward in time and going backward? Here’s a picture that shows what’s going on:

In the middle the system takes on a “simple state”. But going either forward or backward it “randomizes”. The second half of the evolution we can interpret as typical Second-Law-style “degradation to randomness”. But what about the first half? Something unexpected is happening here. From what seems like a “rather random” initial state, the system appears to be “spontaneously organizing itself” to produce—at least temporarily—a simple and “orderly” state. An initial “scrambled” state is spontaneously becoming “unscrambled”. In the setup of ordinary thermodynamics, this would be a kind of “anti-thermodynamic” behavior in which what seems like “random heat” is spontaneously generating “organized mechanical work”.

So why isn’t this what we see happening all the time? Microscopic reversibility guarantees that in principle it’s possible. But what leads to the observed Second Law is that in practice we just don’t normally end up setting up the kind of initial states that give “anti-thermodynamic” behavior. We’ll be talking at length below about why this is. But the basic point is that to do so requires more computational sophistication than we as computationally bounded observers can muster. If the evolution of the system is computationally irreducible, then in effect we have to invert all of that computationally irreducible work to find the initial state to use, and that’s not something that we—as computationally bounded observers—can do.

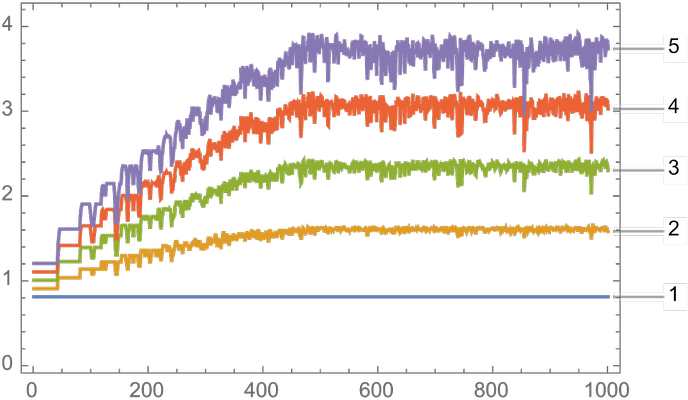

But before we talk more about this, let’s explore some of the consequences of the basic setup we have here. The most obvious aspect of the “simple state” in the middle of the picture above is that it involves a big blob of “adjacent particles”. So now here’s a plot of the “size of the biggest blob that’s present” as a function of time starting from the “simple state”:

The plot shows that, as the picture above suggests, the “specialness” of the initial state quickly “decays” to a “typical state” in which there aren’t any large blobs present. And if we were watching the system at the beginning of this plot, we’d be able to “use the Second Law” to identify a definite “arrow of time”: later times are the ones where the states are “more disordered” in the sense that they only have smaller blobs.
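A minimal sketch of the "size of the biggest blob" measurement, assuming that in a 1D cyclic array a blob is a maximal run of adjacent non-white cells (the exact definition behind the plot isn't stated). The function name is illustrative:

```python
def largest_blob(cells):
    """Length of the longest run of adjacent non-white (nonzero) cells, wrapping cyclically."""
    n = len(cells)
    if all(cells):
        return n
    # Rotate so the array ends with a white cell; then no run can wrap past the end.
    k = cells.index(0)
    rotated = cells[k + 1:] + cells[:k + 1]
    best = run = 0
    for c in rotated:
        run = run + 1 if c else 0
        best = max(best, run)
    return best

print(largest_blob([0, 2, 1, 1, 0, 1]))  # 3
print(largest_blob([1, 1, 0, 0, 1]))     # 3 (this run wraps around the edge)
```

Applying it to each successive state of an evolution gives a blob-size-vs-time curve of the kind plotted here.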

There are many subtleties to all of this. We know that if we set up an “appropriately special” initial state we can get anti-thermodynamic behavior. And indeed for the whole picture above—with its “special initial state”—the plot of blob size vs. time looks like this, with a symmetrical peak “developing” in the middle:

We’ve “made this happen” by setting up “special initial conditions”. But can it happen “naturally”? To some extent, yes. Even away from the peak, we can see there are always little fluctuations: blobs being formed and destroyed as part of the evolution of the system. And if we wait long enough we may see a fairly large blob. For example, here’s one that forms (and decays) after about 245,400 steps:

The actual structure this corresponds to is pretty unremarkable:

But, OK, away from the “special state”, what we see is a kind of “uniform randomness”, in which, for example, there’s no obvious distinction between forward and backward in time. In thermodynamic terms, we’d describe this as having “reached equilibrium”—a situation in which there’s no longer “obvious change”.

To be fair, even in “equilibrium”, there will always be “fluctuations”. But for example in the system we’re looking at here, “fluctuations” corresponding to progressively larger blobs tend to occur exponentially less frequently. So it’s reasonable to think of there being an “equilibrium state” with certain unchanging “typical properties”. And, what’s more, that state is the basic outcome from any initial condition. Whatever special characteristics might have been present in the initial state will tend to be degraded away, leaving only the generic “equilibrium state”.

One might think that the possibility of such an “equilibrium state” showing “typical behavior” would be a specific feature of microscopically reversible systems. But this isn’t the case. And much as the core phenomenon of the Second Law is actually something computational that’s deeper and more general than the specifics of particular physical systems, so also this is true of the core phenomenon of equilibrium. And indeed the presence of what we might call “computational equilibrium” turns out to be directly connected to the overall phenomenon of computational irreducibility.

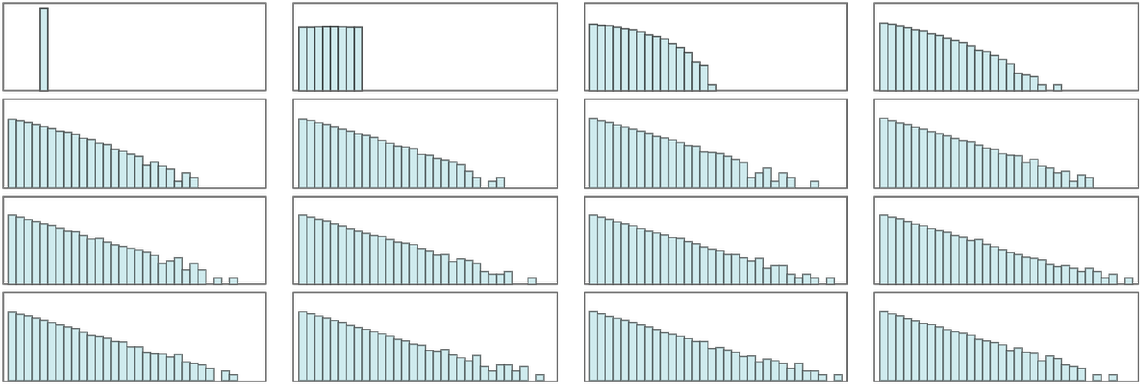

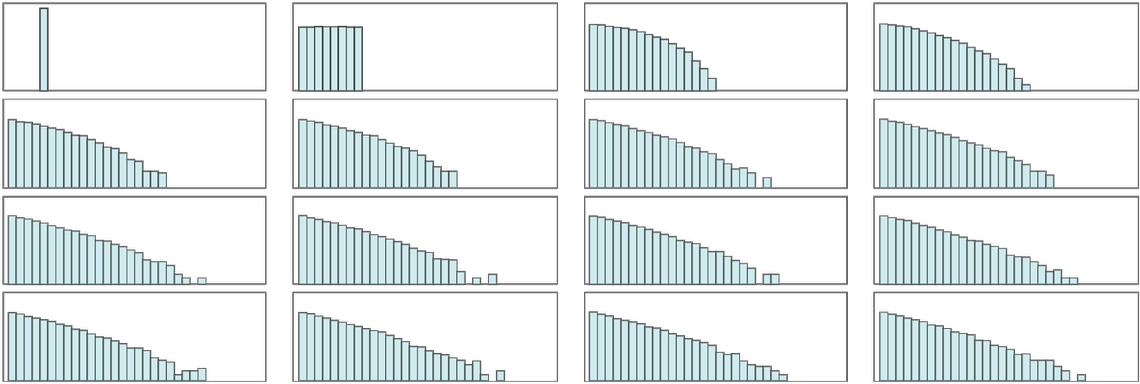

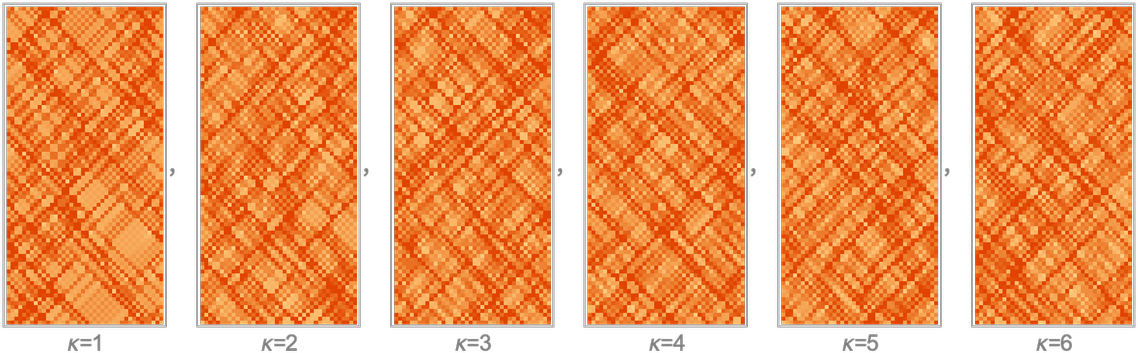

Let’s look again at rule 30. We start it off with different initial states, but in each case it quickly evolves to look basically the same:

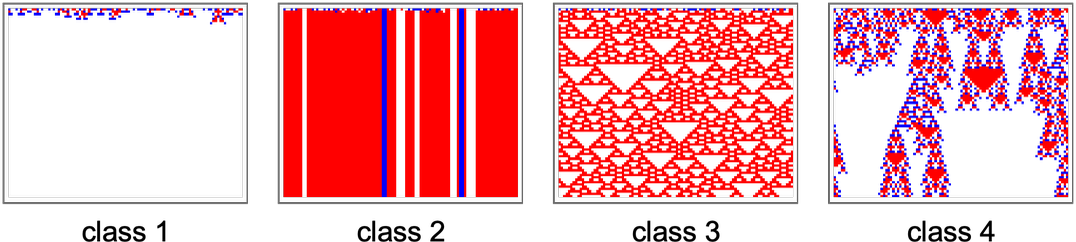

Yes, the details of the patterns that emerge depend on the initial conditions. But the point is that the overall form of what’s produced is always the same: the system has reached a kind of “computational equilibrium” whose overall features are independent of where it came from. Later, we’ll see that the rapid emergence of “computational equilibrium” is characteristic of what I long ago identified as “class 3 systems”, and it’s quite ubiquitous among systems with a wide range of underlying rules, microscopically reversible or not.

That’s not to say that microscopic reversibility is irrelevant to “Second-Law-like” behavior. In what I called class 1 and class 2 systems the force of irreversibility in the underlying rules is strong enough that it overcomes computational irreducibility, and the systems ultimately evolve not to a “computational equilibrium” that looks random but rather to a definite, predictable end state:

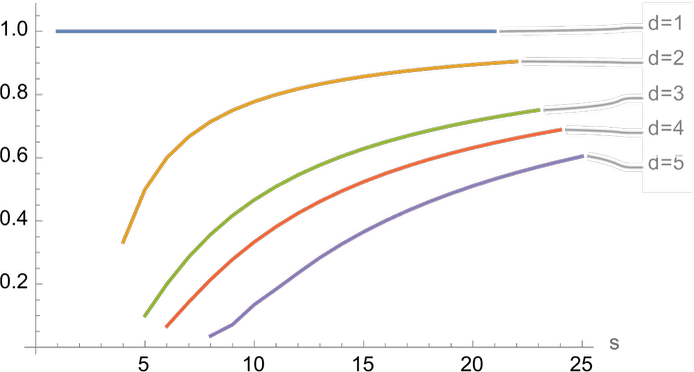

How common is microscopic reversibility? In some types of rules it’s basically always there, by construction. But in other cases microscopically reversible rules represent just a subset of possible rules of a given type. For example, for block cellular automata with $k$ colors and blocks of size $b$, there are altogether $(k^b)^{k^b}$ possible rules, of which $(k^b)!$ are reversible (i.e. of all mappings between possible blocks, only those that are permutations correspond to reversible rules). Among reversible rules, some (like the particle cellular automaton rule above) are “self-inverses”, in the sense that the forward and backward versions of the rule are the same.
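The counting is easy to check by brute force in the smallest case, k = 2 and b = 2, where there are 4 possible blocks. The sketch enumerates every map from blocks to blocks, keeps the permutations, and among those the self-inverse ones. `count_involutions` uses the standard recurrence I(m) = I(m−1) + (m−1)·I(m−2) for permutations that are their own inverse. Function names are illustrative.

```python
from itertools import product
from math import factorial

def count_rules(k, b):
    """(total rules, reversible rules) for block CAs with k colors and block size b."""
    blocks = k ** b
    return blocks ** blocks, factorial(blocks)

def count_involutions(m):
    """Number of permutations of m blocks that are their own inverse (self-inverse rules)."""
    a, c = 1, 1                      # I(0), I(1)
    for i in range(2, m + 1):
        a, c = c, c + (i - 1) * a
    return c

# Brute force for k = 2, b = 2.
blocks = list(product(range(2), repeat=2))
mappings = list(product(blocks, repeat=len(blocks)))   # image of each block, in order
reversible = [m for m in mappings if len(set(m)) == len(blocks)]
self_inverse = [m for m in reversible
                if all(m[blocks.index(m[i])] == blocks[i] for i in range(len(blocks)))]
print(len(mappings), len(reversible), len(self_inverse))   # 256 24 10
print(count_rules(2, 2), count_involutions(4))             # (256, 24) 10
print(count_rules(3, 2))                                   # (387420489, 362880)
```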

But a rule like this is still reversible

and there’s still a straightforward backward rule, but it’s not exactly the same as the forward rule:

Using the backward rule, we can again construct an initial state whose forward evolution seems “anti-thermodynamic”, but the detailed behavior of the whole system isn’t perfectly symmetric between forward and backward in time:

Basic mechanics—like for our hard-sphere gas—is reversible and “self-inverse”. But it’s known that in particle physics there are small deviations from time reversal invariance, so that the rules are not precisely self-inverse—though they are still reversible in the sense that there’s always both a unique successor and a unique predecessor to every state (and indeed in our Physics Project such reversibility is probably guaranteed to exist in the laws of physics assumed by any observer who “believes they are persistent in time”).

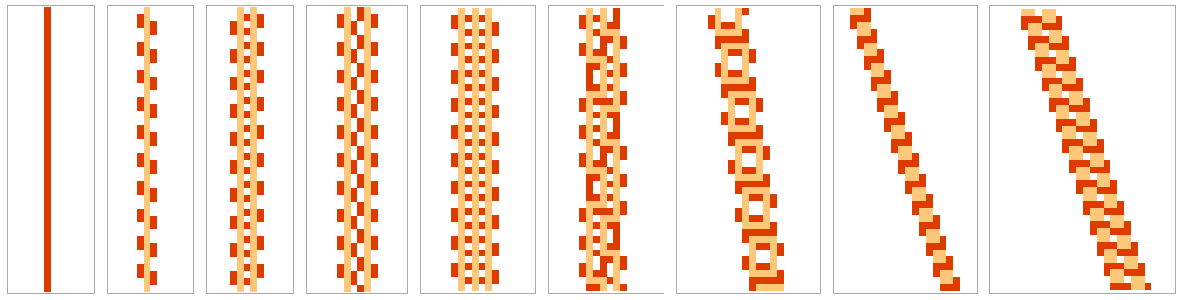

For block cellular automata it’s very easy to determine from the underlying rule whether the system is reversible (just look to see if the rule serves only to permute the blocks). But for something like an ordinary cellular automaton it’s more difficult to determine reversibility from the rule (and above one dimension the question of reversibility can actually be undecidable). Among the 256 2-color nearest-neighbor rules there are only 6 reversible examples, and they are all trivial. Among the 7,625,597,484,987 ($3^{27}$) 3-color nearest-neighbor rules, 1800 are reversible. Of the 82 of these rules that are self-inverse, all are trivial. But when the inverse rules are different, the behavior can be nontrivial:

Note that unlike with block cellular automata the inverse rule often involves a larger neighborhood than the forward rule. (So, for example, here 396 rules have r = 1 inverses, 612 have r = 2, 648 have r = 3 and 144 have r = 4.)
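A brute-force way to screen the 256 2-color rules: a reversible rule must act one-to-one on cyclic arrays of every size, so any rule that maps two configurations to the same one at some small size can be discarded. This sketch checks sizes 1 through 8 (an arbitrary cutoff), so passing the screen is necessary but not by itself a proof of reversibility. The six reversible rules are 15, 51, 85, 170, 204 and 240 (identity, complement and the two shifts, with or without complement), and they should all survive.

```python
from itertools import product

def eca_step(cells, rule):
    """One step of a 2-color nearest-neighbor rule on a cyclic tuple of cells."""
    n = len(cells)
    return tuple((rule >> (4 * cells[i - 1] + 2 * cells[i] + cells[(i + 1) % n])) & 1
                 for i in range(n))

def bijective_on_cycle(rule, n):
    """True if the rule maps the 2**n cyclic configurations of size n one-to-one."""
    images = {eca_step(cells, rule) for cells in product((0, 1), repeat=n)}
    return len(images) == 2 ** n

def reversible_candidates(max_size=8):
    """Rules that are one-to-one on every cyclic size up to max_size."""
    return [r for r in range(256)
            if all(bijective_on_cycle(r, n) for n in range(1, max_size + 1))]

print(reversible_candidates())
```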

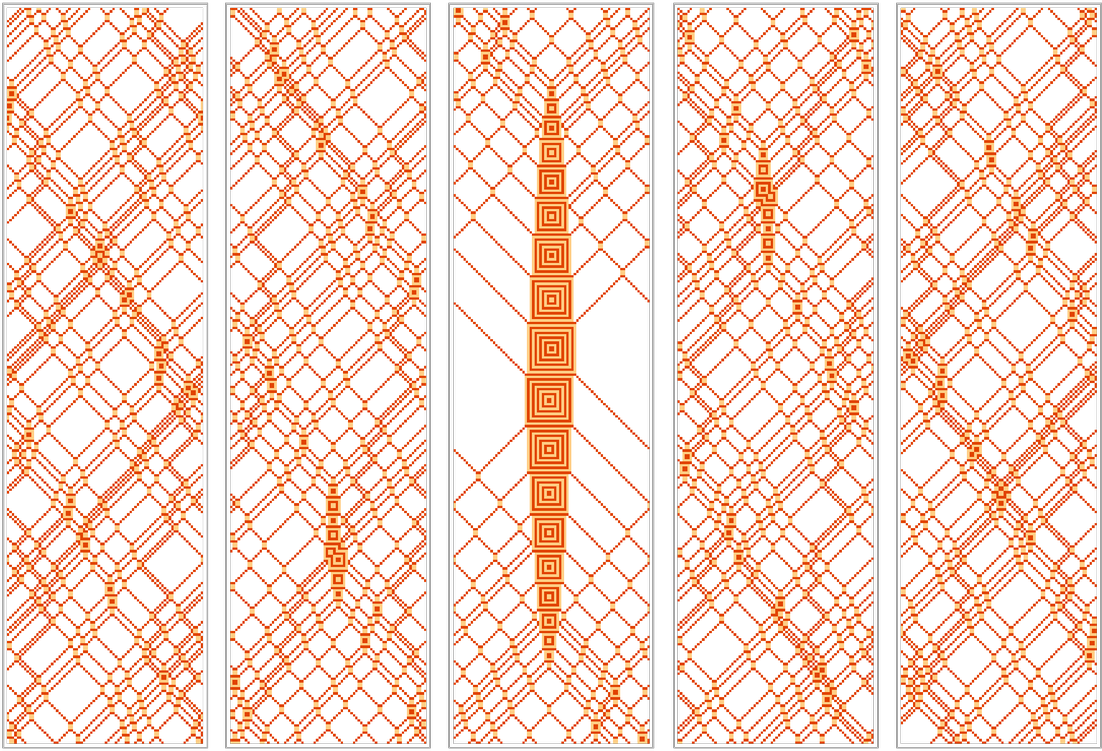

A notable variant on ordinary cellular automata is the class of “second-order” ones, in which the new value of a cell depends not only on its current neighborhood but also on its own value two steps in the past:

With this approach, one can construct reversible second-order variants of all 256 “elementary cellular automata”:

Note that such second-order rules are equivalent to 4-color first-order nearest-neighbor rules:
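One standard construction, assumed here, XORs an ordinary rule's output with the cell's previous value: x_i(t+1) = f(x_{i−1}(t), x_i(t), x_{i+1}(t)) XOR x_i(t−1). Since XOR undoes itself, the same formula recovers x(t−1) from x(t) and x(t+1), so every one of the 256 rules, including rule 30, becomes reversible. Pairing (x_i(t−1), x_i(t)) into a single 4-valued cell gives the first-order 4-color form. A sketch with a cyclic boundary and illustrative names:

```python
def eca_value(rule, left, center, right):
    """Output of an ordinary 2-color nearest-neighbor rule for one neighborhood."""
    return (rule >> (4 * left + 2 * center + right)) & 1

def second_order_step(prev, curr, rule):
    """New row = f(current neighborhood) XOR the same cell one step earlier."""
    n = len(curr)
    return [eca_value(rule, curr[i - 1], curr[i], curr[(i + 1) % n]) ^ prev[i]
            for i in range(n)]

def run(prev, curr, rule, steps):
    """Advance the pair (row at t-1, row at t) by the given number of steps."""
    for _ in range(steps):
        prev, curr = curr, second_order_step(prev, curr, rule)
    return prev, curr

x0 = [0] * 20
x0[10] = 1
x1 = list(x0)
p, c = run(x0, x1, 30, 50)     # forward 50 steps
bp, bc = run(c, p, 30, 50)     # swap the pair and run the same rule: time runs backward
print((bc, bp) == (x0, x1))    # True
```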

Ergodicity and Global Behavior

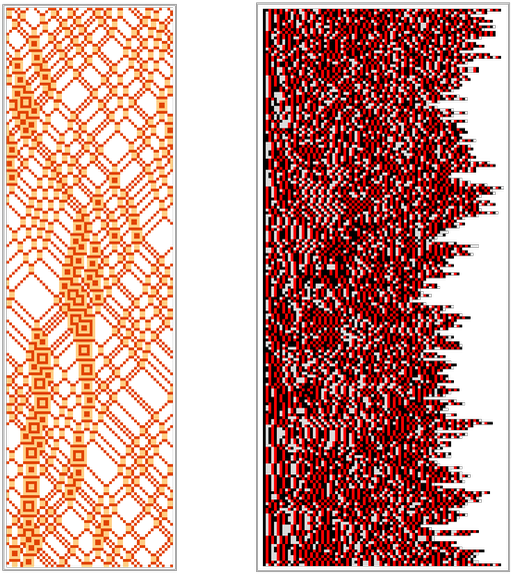

Whenever there’s a system with deterministic rules and a finite total number of states, it’s inevitable that the evolution of the system will eventually repeat. Sometimes the repetition period—or “recurrence time”—will be fairly short

and sometimes it’s much longer:
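Finding the recurrence time amounts to running the system and remembering every state seen. A sketch with illustrative names; storing all states costs memory proportional to the recurrence time, which a constant-memory method such as Floyd's or Brent's cycle detection would avoid. For a reversible system every state lies on a cycle, so the transient part is always 0.

```python
def eca_step(cells, rule):
    """One step of a 2-color nearest-neighbor rule on a cyclic tuple of cells."""
    n = len(cells)
    return tuple((rule >> (4 * cells[i - 1] + 2 * cells[i] + cells[(i + 1) % n])) & 1
                 for i in range(n))

def transient_and_period(state, step):
    """Iterate `step` from `state` until a state repeats; return (transient, period)."""
    seen = {}
    t = 0
    while state not in seen:
        seen[state] = t
        state = step(state)
        t += 1
    first = seen[state]
    return first, t - first

# Rule 30 on cyclic arrays of several sizes, from a single black cell.
for n in range(3, 13):
    start = tuple(1 if i == 0 else 0 for i in range(n))
    print(n, transient_and_period(start, lambda s: eca_step(s, 30)))
```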

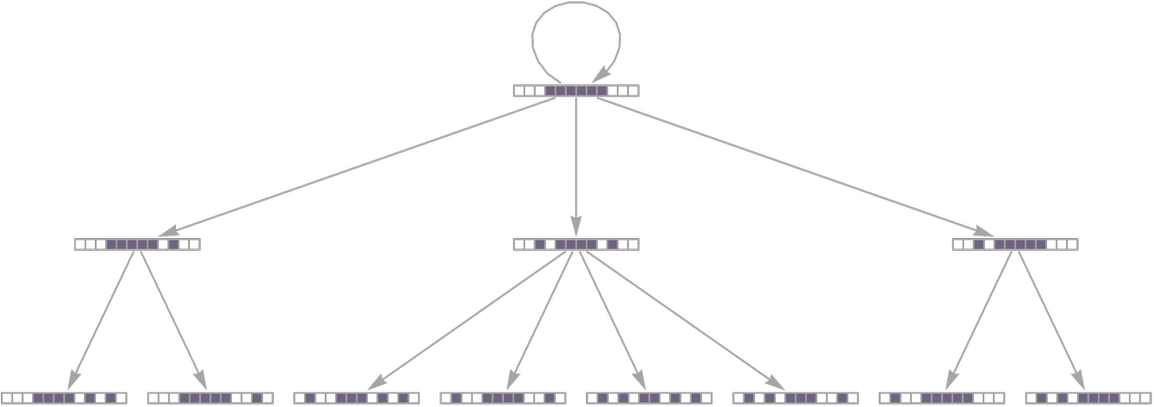

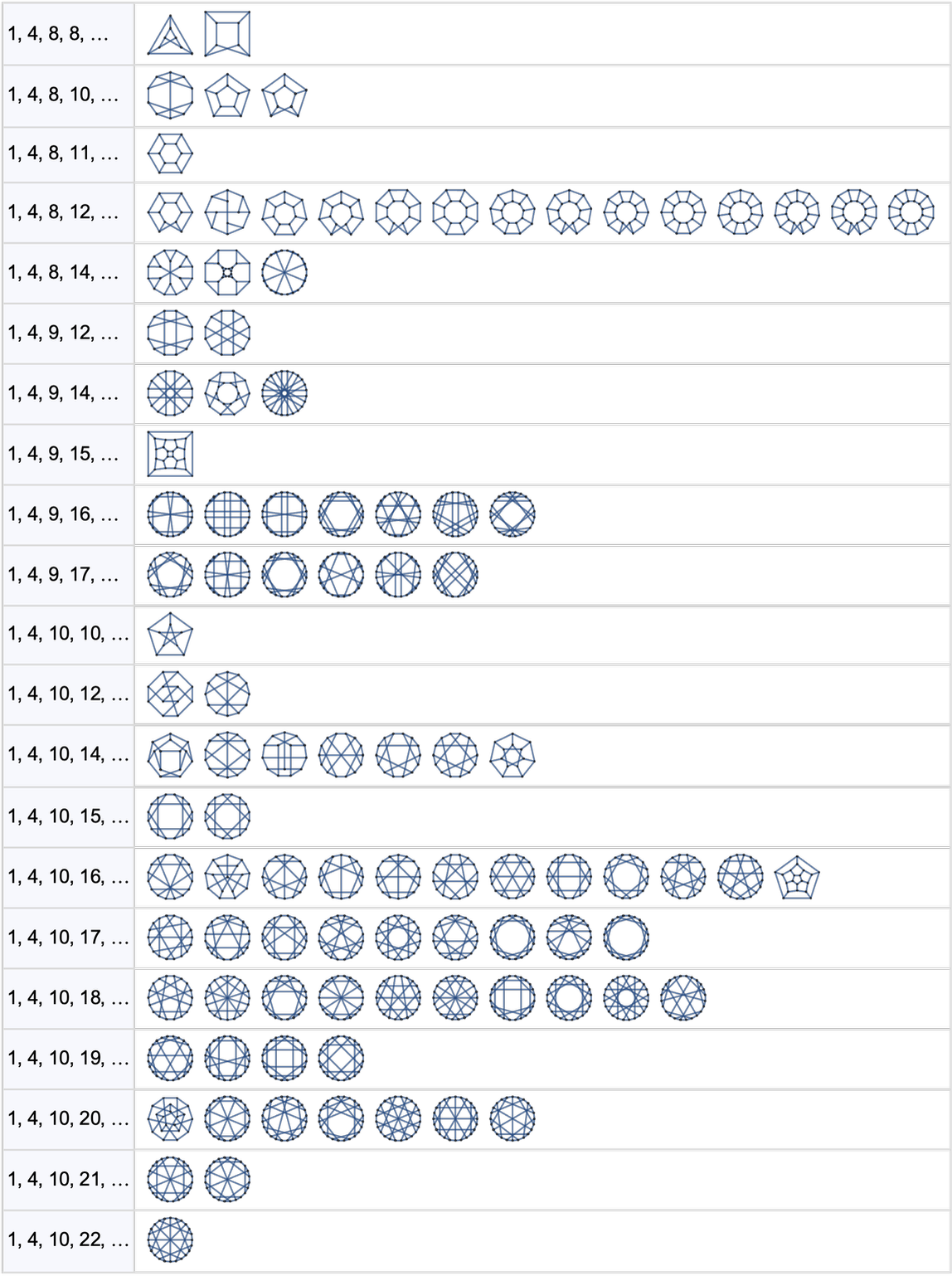

In general we can make a state transition graph that shows how each possible state of the system transitions to another under the rules. For a reversible system this graph consists purely of cycles in which each state has a unique successor and a unique predecessor. For a size-4 version of the system we’re studying here, there are a total of $2 \times 3^4 = 162$ possible states (the factor 2 comes from the even/odd “phases” of the block cellular automaton), and the state transition graph for this system is:

For a non-reversible system—like rule 30—the state transition graph (here shown for sizes 4 and 8) also includes “transient trees” of states that can be visited only once, on the way to a cycle:

In the past one of the key ideas for the origin of Second-Law-like behavior was ergodicity. And in the discrete-state systems we’re discussing here the definition of perfect ergodicity is quite straightforward: ergodicity just implies that the state transition graph must consist not of many cycles, but instead purely of one big cycle—so that whatever state one starts from, one’s always guaranteed to eventually visit every possible other state.
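The state transition graph and this ergodicity criterion can be computed directly for small systems. This sketch builds the successor map of rule 30 on cyclic arrays (sizes 4 and 8, as in the pictures above), finds every cycle, and calls a system perfectly ergodic when there is a single cycle through all states. Names are illustrative.

```python
from itertools import product

def eca_step(cells, rule):
    """One step of a 2-color nearest-neighbor rule on a cyclic tuple of cells."""
    n = len(cells)
    return tuple((rule >> (4 * cells[i - 1] + 2 * cells[i] + cells[(i + 1) % n])) & 1
                 for i in range(n))

def transition_graph(rule, n):
    """Successor of every cyclic configuration of n cells."""
    return {s: eca_step(s, rule) for s in product((0, 1), repeat=n)}

def find_cycles(succ):
    """All cycles of a finite deterministic map given as {state: successor}."""
    visited, cycles = set(), []
    for start in succ:
        if start in visited:
            continue
        position, path = {}, []
        s = start
        while s not in visited and s not in position:
            position[s] = len(path)
            path.append(s)
            s = succ[s]
        if s in position:          # the walk closed on itself: a new cycle
            cycles.append(path[position[s]:])
        visited.update(path)       # mark the whole walk (transient and cycle) as done
    return cycles

def is_ergodic(succ):
    """Perfect ergodicity: one single cycle that passes through every state."""
    cycles = find_cycles(succ)
    return len(cycles) == 1 and len(cycles[0]) == len(succ)

for n in (4, 8):
    g = transition_graph(30, n)
    print(n, sorted(len(c) for c in find_cycles(g)), is_ergodic(g))
```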

But why is this relevant to the Second Law? Well, we’ve said that the Second Law is about “degradation” from “special states” to “typical states”. And if one’s going to “do the ergodic thing” of visiting all possible states, then inevitably most of the states we’ll at least eventually pass through will be “typical”.

But on its own, this definitely isn’t enough to explain “Second-Law behavior” in practice. In an example like the following, one sees rapid “degradation” of a simple initial state to something “random” and “typical”:

But of the 2 ✕ 3⁸⁰ ≈ 10³⁸ possible states that this system would eventually visit if it were ergodic, there are still a huge number that we wouldn’t consider “typical” or “random”. For example, just knowing that the system is eventually ergodic doesn’t tell one that it wouldn’t start off by painstakingly “counting down” like this, “keeping the action” in a tightly organized region:

So somehow there’s more than ergodicity that’s needed to explain the “degradation to randomness” associated with “typical Second-Law behavior”. And, yes, in the end it’s going to be a computational story, connected to computational irreducibility and its relationship to observers like us. But before we get there, let’s talk some more about “global structure”, as captured by things like state transition diagrams.

Consider again the size-4 case above. The rules are such that they conserve the number of “particles” (i.e. non-white cells). And this means that the states of the system necessarily break into separate “sectors” for different particle numbers. But even with a fixed number of particles, there are typically quite a few distinct cycles:
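Each cell is white, light or dark, and the rule conserves the number of non-white cells. So one can count how many states fall into each particle-number sector. Choose which k of the n cells are occupied, then make each occupied cell light or dark, which gives C(n, k)·2^k configurations. The short sketch below does this count. Following the text, it assumes an extra factor of 2 for the block cellular automaton's even/odd phases. It also reproduces the totals mentioned above: 162 states at size 4 and roughly 10^38 at size 80.

```python
from math import comb, log10

def sector_sizes(n):
    """Number of cell configurations with k non-white cells, for k = 0..n.

    Each of the k occupied cells can be light or dark, hence the 2**k factor.
    """
    return [comb(n, k) * 2 ** k for k in range(n + 1)]

def total_states(n, phases=2):
    # Summing over sectors gives (1 + 2)**n = 3**n by the binomial theorem.
    return phases * sum(sector_sizes(n))

print(sector_sizes(4))                     # [1, 8, 24, 32, 16]
print(total_states(4))                     # 162
print(round(log10(total_states(80)), 1))   # 38.5
```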

The system we’re using here is too small for us to be able to convincingly identify “simple” versus “typical” or “random” states, though for example we can see that only a few of the cycles have the simplifying feature of left-right symmetry.

Going to size 6 one begins to get a sense that there are some “always simple” cycles, as well as others that involve “more typical” states:

At size 10 the state transition graph for “4-particle” states has the form

and the longer cycles are:

It’s notable that most of the longest (“closest-to-ergodicity”) cycles look rather “simple and deliberate” all the way through. The “more typical and random” behavior seems to be reserved here for shorter cycles.

But in studying “Second Law behavior” what we’re mostly interested in is what happens from initially orderly states. Here’s an example of the results for progressively larger “blobs” in a system of size 30:

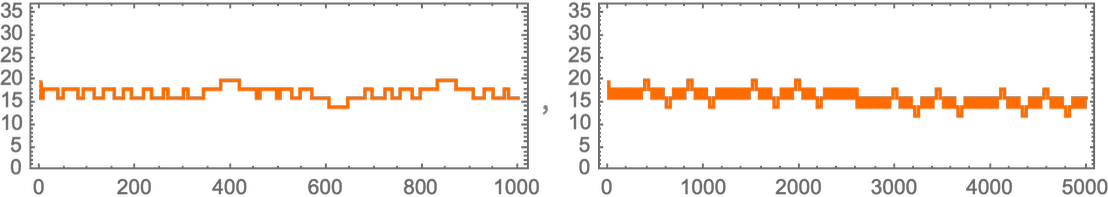

To get some sense of how the “degradation to randomness” proceeds, we can plot how the maximum blob size evolves in each case:

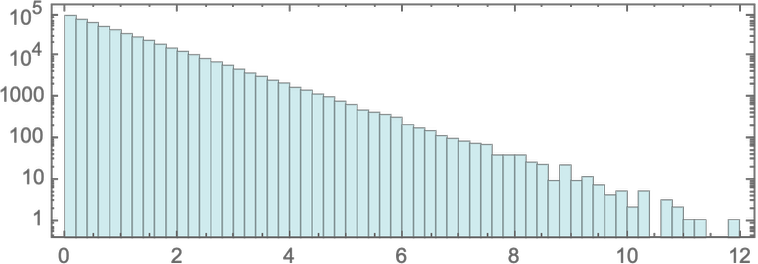
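The text doesn't spell out exactly how “blob size” is measured. As an assumption, the sketch below takes it to be the length of the longest contiguous run of non-white cells on a cyclic row, with cells coded 0 for white and 1 or 2 for light or dark. This is one simple way to produce such a plot from a list of states.

```python
def max_blob_size(state):
    """Longest run of non-white (nonzero) cells, with wraparound at the edges."""
    n = len(state)
    if all(state):
        return n  # the whole cyclic row is one blob
    # Start scanning just after a white cell so no run is split by the wraparound.
    start = state.index(0) + 1
    best = run = 0
    for i in range(n):
        if state[(start + i) % n]:
            run += 1
            best = max(best, run)
        else:
            run = 0
    return best

def blob_history(states):
    """Maximum blob size at each step of an evolution given as a list of states."""
    return [max_blob_size(s) for s in states]
```

Plotting `blob_history(states)` against the step number gives one curve per initial condition.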

For some of the initial conditions one sees “thermodynamic-like” behavior, though quite often it’s overwhelmed by “freezing”, fluctuations, recurrences, etc. In all cases the evolution must eventually repeat, but the “recurrence times” vary widely (the longest—for a width-13 initial blob—being 861,930):

Let’s look at what happens in these recurrences, using as an example a width-17 initial blob—whose evolution begins:

As the picture suggests, the initial “large blob” quickly gets at least somewhat degraded, though there continue to be definite fluctuations visible:

If one keeps going long enough, one reaches the recurrence time, which in this case is 155,150 steps. Looking at the maximum blob size through a “whole cycle” one sees many fluctuations:

Most are small—as illustrated here with ordinary and logarithmic histograms:

But some are large. And for example at half the full recurrence time there is a fluctuation

that involves an “emergent blob” as wide as in the initial condition—that altogether lasts around 280 steps:

There are also “runner-up” fluctuations with various forms—that reach “blob width 15” and occur more or less equally spaced throughout the cycle:

It’s notable that clear Second-Law-like behavior occurs even in a size-30 system. But if we go, say, to a size-80 system it becomes even more obvious

and one sees rapid and systematic evolution towards an “equilibrium state” with fairly small fluctuations:

It’s worth mentioning again that the idea of “reaching equilibrium” doesn’t depend on the particulars of the rule we’re using—and in fact it can happen more rapidly in other reversible block cellular automata where there are no “particle conservation laws” to slow things down:

In such rules there also tend to be fewer, longer cycles in the state transition graph, as this comparison for size 6 with the “delayed particle” rule suggests:

But it’s important to realize that the “approach to equilibrium” is its own—computational—phenomenon, not directly related to long cycles and concepts like ergodicity. And indeed, as we mentioned above, it also doesn’t depend on built-in reversibility in the rules, so one sees it even in something like rule 30:

How Random Does It Get?

At an everyday level, the core manifestation of the Second Law is the tendency of things to “degrade” to randomness. But just how random is the randomness? One might think that anything that is made by a simple-to-describe algorithm—like the pattern of rule 30 or the digits of π—shouldn’t really be considered “random”. But for the purpose of understanding our experience of the world what matters is not what is “happening underneath” but instead what our perception of it is. So the question becomes: when we see something produced, say by rule 30 or by π, can we recognize regularities in it or not?

And in practice what the Second Law asserts is that systems will tend to go from states where we can recognize regularities to ones where we cannot. And the point is that this phenomenon is something ubiquitous and fundamental, arising from core computational ideas, in particular computational irreducibility.

But what does it mean to “recognize regularities”? In essence it’s all about seeing if we can find succinct ways to summarize what we see—or at least the aspects of what we see that we care about. In other words, what we’re interested in is finding some kind of compressed representation of things. And what the Second Law is ultimately about is saying that even if compression works at first, it won’t tend to keep doing so.
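As a rough stand-in for “finding a compressed representation”, one can hand states to an off-the-shelf general-purpose compressor. The sketch below uses Python's standard `zlib` module. This is an assumption for illustration, not the method behind the figures here. It stores states of rule 30 with one byte per cell and compares how well a simple initial state and later states compress.

```python
import zlib

def rule30_step(state):
    n = len(state)
    return [state[(i - 1) % n] ^ (state[i] | state[(i + 1) % n]) for i in range(n)]

def compressed_size(state):
    """Bytes needed by zlib (maximum compression level) to store the state."""
    return len(zlib.compress(bytes(state), 9))

width = 401
state = [0] * width
state[width // 2] = 1  # simple initial condition: a single black cell
sizes = {}
for t in range(201):
    if t in (0, 50, 200):
        sizes[t] = compressed_size(state)
    state = rule30_step(state)
print(sizes)  # compressed size in bytes at steps 0, 50 and 200
```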

As a very simple example, let’s consider doing compression by essentially “representing our data as a sequence of blobs”—or, more precisely, using run-length encoding to represent sequences of 0s and 1s in terms of lengths of successive runs of identical values. For example, given the data

we split into runs of identical values

then as a “compressed representation” just give the length of each run

which we can finally encode as a sequence of binary numbers with base-3 delimiters:
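Here is a minimal sketch of this run-length scheme. The exact layout is an assumption. Each run length is written in binary (digits 0 and 1), and the digit 2 serves as the delimiter between runs, so the whole encoding is a single base-3 string. The value of the first run is stored separately so that the original sequence can be reconstructed. Inputs are assumed to be nonempty.

```python
from itertools import groupby

def run_lengths(bits):
    """Lengths of successive runs of identical values, e.g. 0,0,1,1,1,0 -> 2,3,1."""
    return [len(list(group)) for _, group in groupby(bits)]

def encode(bits):
    """Return (first value, base-3 string of binary run lengths separated by '2')."""
    return bits[0], "2".join(format(k, "b") for k in run_lengths(bits))

def decode(first, code):
    bits, value = [], first
    for chunk in code.split("2"):
        bits.extend([value] * int(chunk, 2))
        value = 1 - value  # runs alternate between 0 and 1
    return bits

data = [0, 0, 1, 1, 1, 0]
print(run_lengths(data))  # [2, 3, 1]
print(encode(data))       # (0, '1021121')
```

A long run costs only about log₂ of its length in digits, so a state made of a few long runs compresses well. A random sequence, whose runs are mostly of length 1 or 2, gains nothing.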

“Transforming” our “particle cellular automaton” in this way we get:

The “simple” initial conditions here are successfully compressed, but the later “random” states are not. Starting from a random initial condition, we don’t see any significant compression at all:

What about other methods of compression? A standard approach involves looking at blocks of successive values on a given step, and asking about the relative frequencies with which different possible blocks occur. But for the particular rule we are discussing here, there’s immediately an issue. The rule conserves the total number of non-white cells—so at least for size-1 blocks the frequency of such blocks will always be what it was for the initial conditions.

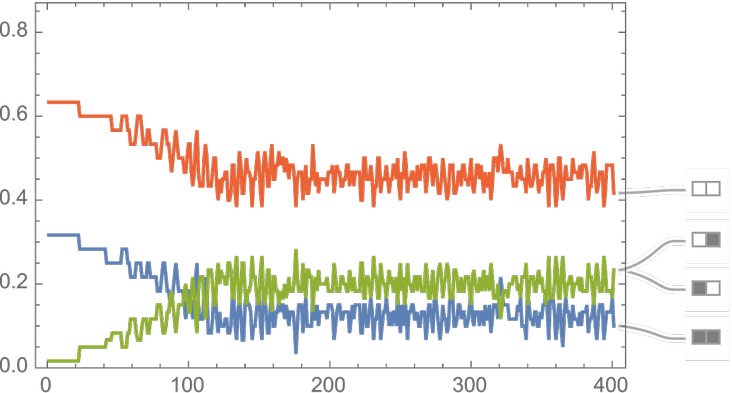

What about for larger blocks? This gives the evolution of relative frequencies of size-2 blocks starting from the simple initial condition above:

Arranging for exactly half the cells to be non-white, the frequencies of size-2 blocks converge towards equality:

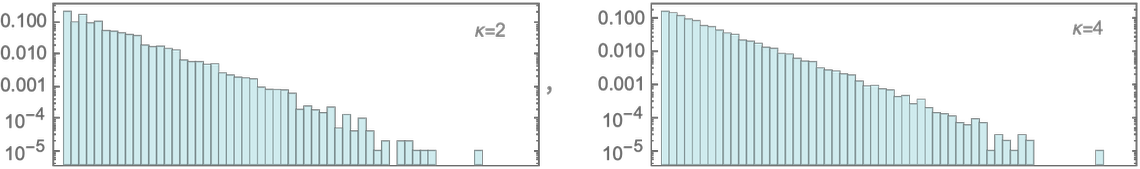

In general, the presence of unequal frequencies for different blocks allows the possibility of compression: much like in Morse code, one just has to use shorter codewords for more frequent blocks. How much compression is ultimately possible in this way can be found by computing −Σᵢ pᵢ log pᵢ for the probabilities pᵢ of all blocks of a given length, which we see quickly converge to constant “equilibrium” values:
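The block-frequency measure can be sketched as follows. The sketch assumes cyclic boundary conditions, so that a row of n cells contains exactly n blocks of each length. It uses log base 2, so the entropy reads directly as the average number of bits per block that an ideal Morse-code-like scheme could achieve.

```python
from collections import Counter
from math import log2

def block_frequencies(state, k):
    """Relative frequencies of the length-k blocks in a cyclic row of cells."""
    n = len(state)
    blocks = Counter(tuple(state[(i + j) % n] for j in range(k)) for i in range(n))
    return {block: count / n for block, count in blocks.items()}

def block_entropy(state, k):
    """-sum p_i log2 p_i over all length-k blocks that occur."""
    return sum(-p * log2(p) for p in block_frequencies(state, k).values())

print(block_entropy([0] * 12, 2))    # 0.0: only one kind of block occurs
print(block_entropy([0, 1] * 6, 2))  # 1.0: blocks 01 and 10, equally often
```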

In the end we know that the initial conditions were “simple” and “special”. But the issue is whether whatever method we use for compression or for recognizing regularities is able to pick up on this. Or whether somehow the evolution of the system has sufficiently “encoded” the information about the initial condition that it’s no longer detectable. Clearly if our “method of compression” involved explicitly running the evolution of the system backwards, then it’d be possible to pick out the special features of the initial conditions. But explicitly running the evolution of the system requires doing lots of computational work.

So in a sense the question is whether there’s a shortcut. And, yes, one can try all sorts of methods from statistics, machine learning, cryptography and so on. But so far as one can tell, none of them make any significant progress: the “encoding” associated with the evolution of the system seems to just be too strong to “break”. Ultimately it’s hard to know for sure that there’s no scheme that can work. But any scheme must correspond to running some program. So a way to get a bit more evidence is just to enumerate “possible compression programs” and see what they do.

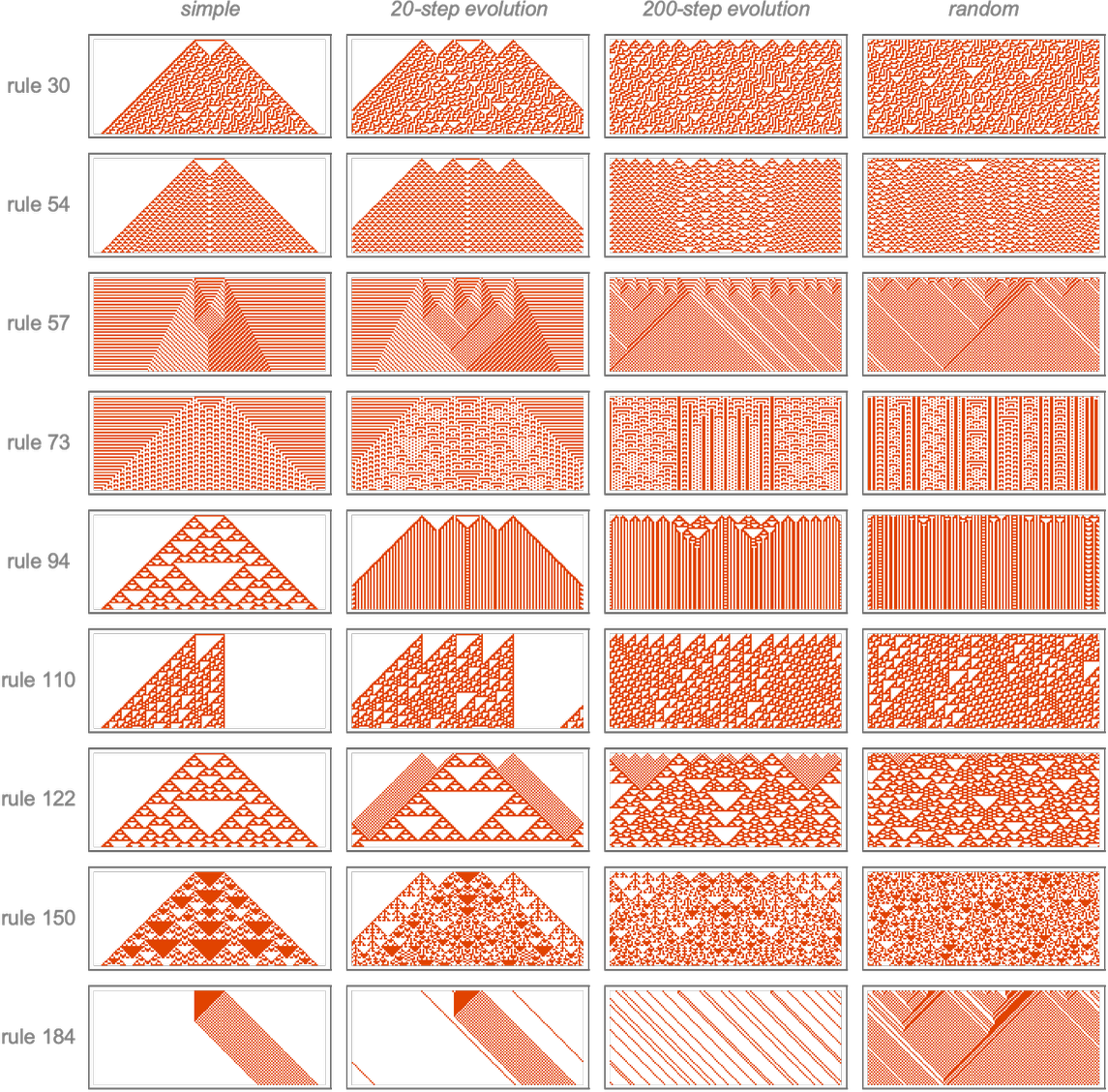

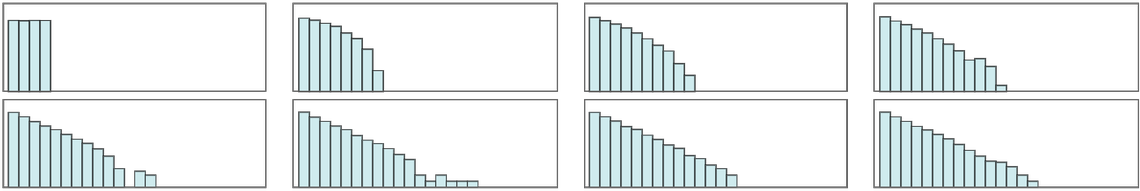

In particular, we can for example enumerate simple cellular automata, and see whether when run they produce “obviously different” results. Here’s what happens for a collection of different cellular automata when they are applied to a “simple initial condition”, to states obtained after 20 and 200 steps of evolution according to the particle cellular automaton rule and to an independently random state:

And, yes, in many cases the simple initial condition leads to “obviously different behavior”. But there’s nothing obviously different about the behavior obtained in the last two cases. Or, in other words, at least programs based on these simple cellular automata don’t seem to be able to “decode” the different origins of the third and fourth cases shown here.
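One way to mechanize this kind of enumeration is sketched below. Each elementary cellular automaton (rules 0 to 255 in the standard numbering) is treated as a candidate “analyzer” and fed a binary version of a state. Using the particle-or-not version of the state is an assumption here. The sketch then records a summary of each rule's output. Final density is used purely as a crude, hypothetical stand-in for judging by eye whether results look “obviously different”.

```python
import random

def eca_step(state, rule):
    """One step of elementary CA `rule` (0-255) on a cyclic row of 0s and 1s."""
    n = len(state)
    return [(rule >> (4 * state[i - 1] + 2 * state[i] + state[(i + 1) % n])) & 1
            for i in range(n)]

def analyzer_profile(state, steps=30):
    """Final density produced by each of the 256 elementary rules run from `state`."""
    profile = []
    for rule in range(256):
        s = list(state)
        for _ in range(steps):
            s = eca_step(s, rule)
        profile.append(sum(s) / len(s))
    return profile

width = 61
simple = [0] * width
simple[width // 2] = 1
rng = random.Random(1)
noisy = [rng.randint(0, 1) for _ in range(width)]
a, b = analyzer_profile(simple), analyzer_profile(noisy)
# Count rules whose summary differs strongly between the two inputs.
print(sum(abs(x - y) > 0.25 for x, y in zip(a, b)))
```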

What does all this mean? The fundamental point is that there seems to be enough computational irreducibility in the evolution of the system that no computationally bounded observer can “see through it”. And so—at least as far as a computationally bounded observer is concerned—“specialness” in the initial conditions is quickly “degraded” to an “equilibrium” state that “seems random”. Or, in other words, the computational process of evolution inevitably seems to lead to the core phenomenon of the Second Law.

The Concept of Entropy

“Entropy increases” is a common statement of the Second Law. But what does this mean, especially in our computational context? The answer is somewhat subtle, and understanding it will put us right back into questions of the interplay between computational irreducibility and the computational boundedness of observers.

When it was first introduced in the 1860s, entropy was thought of very much like energy, and was computed from ratios of heat content to temperature. But soon—particularly through work on gases by Boltzmann—there arose a quite different way of computing (and thinking about) entropy: in terms of the log of the number of possible states of a system. Later we’ll discuss the correspondence between these different ideas of entropy. But for now let’s consider what I view as the more fundamental definition based on counting states.

In the early days of entropy, when one imagined that—as in the case of the hard-sphere gas—the parameters of the system were continuous, it could be mathematically complex to tease out any kind of discrete “counting of states”. But from what we’ve discussed here, it’s clear that the core phenomenon of the Second Law doesn’t depend on the presence of continuous parameters, and in something like a cellular automaton it’s basically straightforward to count discrete states.

But now we have to get more careful about our definition of entropy. Given any particular initial state, a deterministic system will always evolve through a series of individual states—so that there’s always only one possible state for the system, which means the entropy will always be exactly zero. (This is a lot muddier and more complicated when continuous parameters are considered, but in the end the conclusion is the same.)

So how do we get a more useful definition of entropy? The key idea is to think not about individual states of a system but instead about collections of states that we somehow consider “equivalent”. In a typical case we might imagine that we can’t measure all the detailed positions of molecules in a gas, so we look just at “coarse-grained” states in which we consider, say, only the number of molecules in particular overall bins or blocks.

The entropy can be thought of as counting the number of possible microscopic states of the system that are consistent with some overall constraint—like a certain number of particles in each bin. If the constraint talks specifically about the position of every particle, there’ll only be one microscopic state consistent with the constraints, and the entropy will be zero. But if the constraint is looser, there’ll often be many possible microscopic states consistent with it, and the entropy we define will be nonzero.

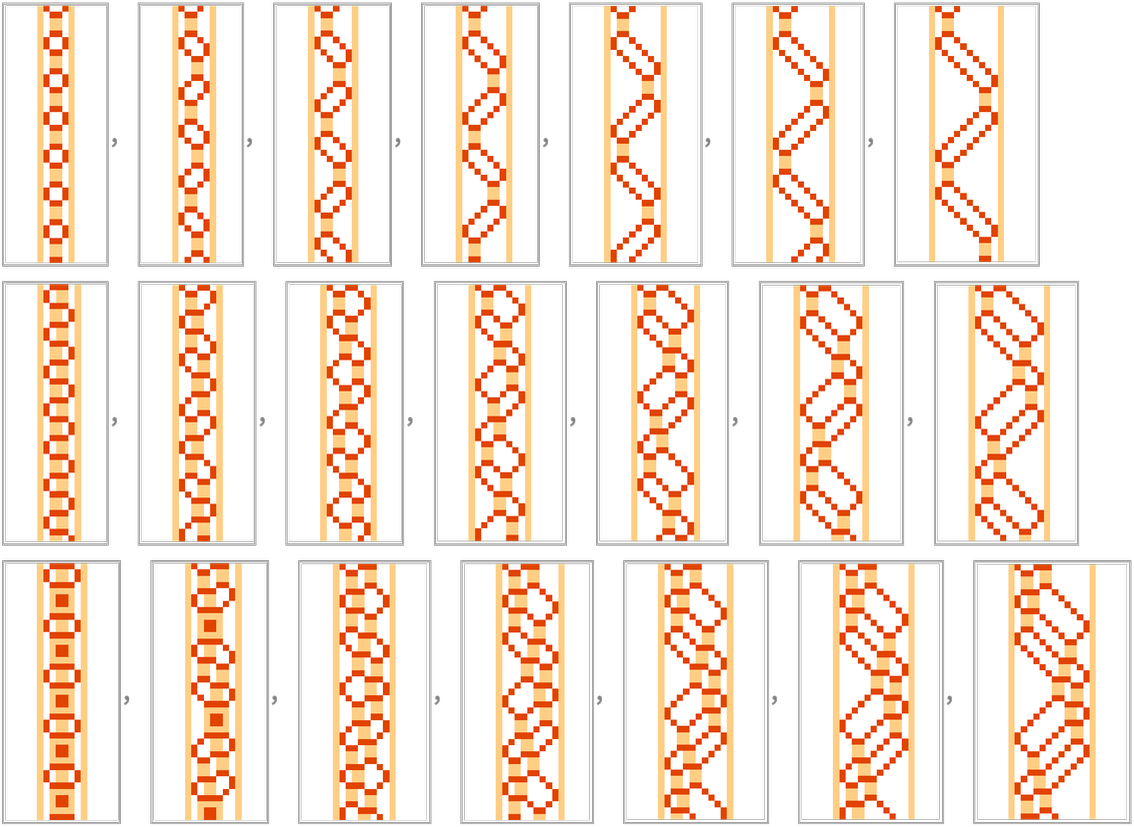

Let’s look at this in the context of our particle cellular automaton. Here’s a particular evolution, starting from a specific microscopic state, together with a sequence of “coarse grainings” of this evolution in which we keep track only of “overall particle density” in progressively larger blocks:

The very first “coarse graining” here is particularly trivial: all it’s doing is to say whether a “particle is present” or not in each cell—or, in other words, it’s showing every particle but ignoring whether it’s “light” or “dark”. But in making this and the other coarse-grained pictures we’re always starting from the single “underlying microscopic evolution” that’s shown and just “adding coarse graining after the fact”.

But what if we assume that all we ever know about the system is a coarse-grained version? Say we look at the “particle-or-not” case. At a coarse-grained level the initial condition just says there are 6 particles present. But it doesn’t say if each particle is light or dark, and actually there are 2⁶ = 64 possible microscopic configurations. And the point is that each of these microscopic configurations has its own evolution:
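Counting the microstates behind a coarse-grained description is easy to do by brute force. The sketch below assumes cells are coded 0 (white), 1 (light) and 2 (dark), and that the particle-or-not coarse graining just maps 1 and 2 to 1. The particular 6-particle row is made up for illustration. The code enumerates every microstate consistent with a given coarse-grained state and takes the log of the count as the entropy.

```python
from itertools import product
from math import log

def particle_or_not(state):
    """Coarse graining that keeps only whether each cell is occupied."""
    return tuple(1 if cell else 0 for cell in state)

def consistent_microstates(coarse):
    """All microstates whose particle-or-not coarse graining equals `coarse`."""
    choices = [(1, 2) if occupied else (0,) for occupied in coarse]
    return list(product(*choices))

coarse = (0, 1, 1, 0, 1, 1, 1, 0, 1, 0)  # 6 particles, light/dark unknown
micro = consistent_microstates(coarse)
print(len(micro), log(len(micro)))  # number of microstates and its natural log
```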

But now we can consider coarse graining things. All 64 initial conditions are—by construction—equivalent under particle-or-not coarse graining:

But after just one step of evolution, different initial “microstates” can lead to different coarse-grained evolutions:

In other words, a single coarse-grained initial condition “spreads out” after just one step to several coarse-grained states:

After another step, a larger number of coarse-grained states are possible:

And in general the number of distinct coarse-grained states that can be reached grows fairly rapidly at first, though soon saturates, showing just fluctuations thereafter:

But the coarse-grained entropy is basically just proportional to the log of this quantity, so it too will show rapid growth at first, eventually leveling off at an “equilibrium” value.
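Here is a rough sketch of this “spreading out”, under explicit assumptions. Rule 30 stands in for the particle cellular automaton, whose rule isn't given here. Cells are 0 or 1, and the coarse graining records only the number of black cells in each block of `b` cells. The code enumerates every microstate consistent with one coarse-grained initial condition, evolves them all, and counts the distinct coarse-grained states at each step. The log of that count plays the role of the coarse-grained entropy. The exact numbers depend on the stand-in rule and the block size.

```python
from itertools import combinations, product
from math import log

def rule30_step(state):
    n = len(state)
    return tuple(state[(i - 1) % n] ^ (state[i] | state[(i + 1) % n]) for i in range(n))

def coarse_grain(state, b):
    """Number of black cells in each block of b consecutive cells."""
    return tuple(sum(state[i:i + b]) for i in range(0, len(state), b))

def microstates(coarse, b):
    """Every 0/1 row whose block counts match `coarse`."""
    per_block = []
    for count in coarse:
        options = []
        for ones in combinations(range(b), count):
            options.append(tuple(1 if j in ones else 0 for j in range(b)))
        per_block.append(options)
    return [sum(blocks, ()) for blocks in product(*per_block)]

def coarse_state_counts(coarse, b, steps, step=rule30_step):
    """How many distinct coarse-grained states are reachable after each step."""
    states = microstates(coarse, b)
    counts = []
    for _ in range(steps + 1):
        counts.append(len({coarse_grain(s, b) for s in states}))
        states = [step(s) for s in states]
    return counts

counts = coarse_state_counts((2, 2, 0, 0, 0, 0), b=4, steps=12)
print(counts)
print([round(log(c), 2) for c in counts])  # coarse-grained entropy at each step
```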

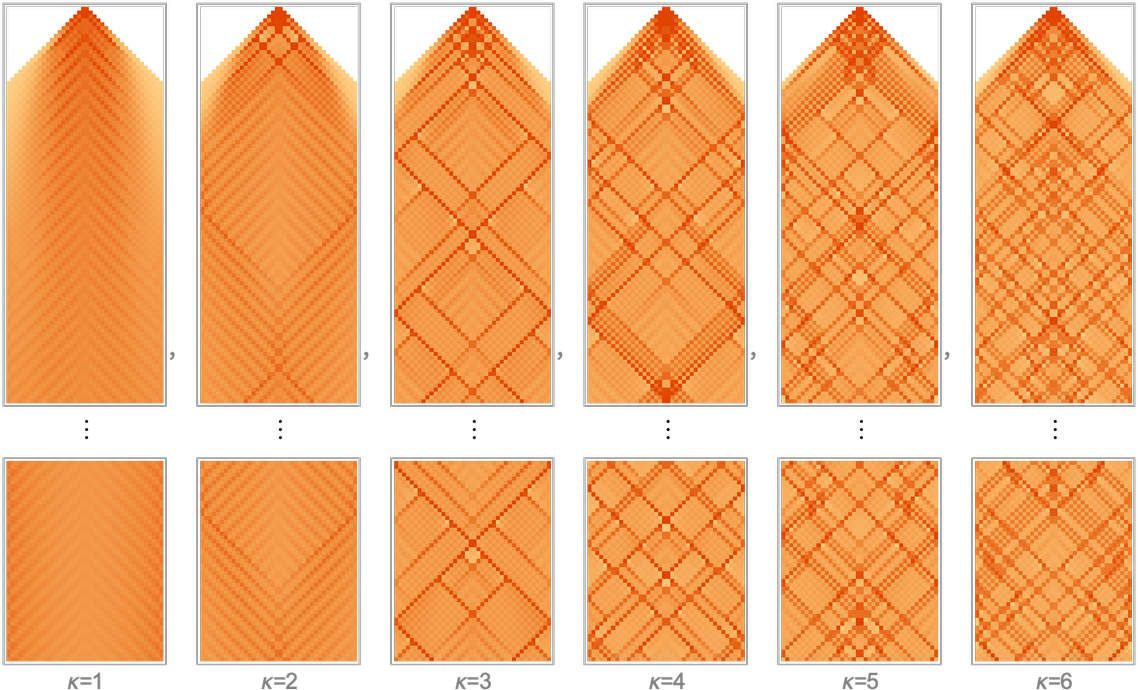

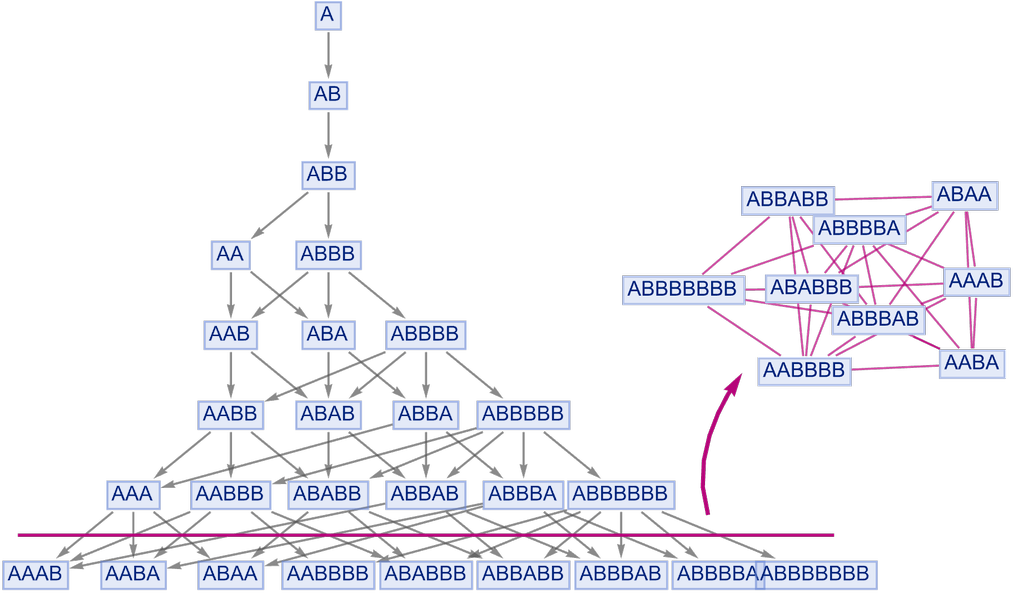

The framework of our Physics Project makes it natural to think of coarse-grained evolution as a multicomputational process—in which a given coarse-grained state has not just a single successor, but in general multiple possible successors. For the case we’re considering here, the multiway graph representing all possible evolution paths is then:

The branching here reflects a spreading out in coarse-grained state space, and an increase in coarse-grained entropy. If we continue longer—so that the system begins to “approach equilibrium”—we’ll start to see some merging as well

as a less “time-oriented” graph layout makes clear:
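The multiway graph can be sketched with the same stand-in assumptions: rule 30 on 0/1 cells, with block-count coarse graining. Each node is a coarse-grained state at a given step. There is an edge whenever some underlying microstate carries one coarse-grained state to the next. Nodes with several outgoing edges show branching, and nodes with several incoming edges show merging. Dropping the step index from the nodes would give a less time-oriented graph in which recurring states merge.

```python
from collections import defaultdict
from itertools import combinations, product

def rule30_step(state):
    n = len(state)
    return tuple(state[(i - 1) % n] ^ (state[i] | state[(i + 1) % n]) for i in range(n))

def coarse_grain(state, b):
    """Number of black cells in each block of b consecutive cells."""
    return tuple(sum(state[i:i + b]) for i in range(0, len(state), b))

def microstates(coarse, b):
    """Every 0/1 row whose block counts match `coarse`."""
    per_block = [[tuple(1 if j in ones else 0 for j in range(b))
                  for ones in combinations(range(b), count)] for count in coarse]
    return [sum(blocks, ()) for blocks in product(*per_block)]

def multiway_edges(coarse, b, steps):
    """Edges ((t, C), (t + 1, C')) of the coarse-grained multiway graph."""
    states = microstates(coarse, b)
    edges = set()
    for t in range(steps):
        nxt = [rule30_step(s) for s in states]
        for s, s2 in zip(states, nxt):
            edges.add(((t, coarse_grain(s, b)), (t + 1, coarse_grain(s2, b))))
        states = nxt
    return edges

edges = multiway_edges((2, 2, 0, 0, 0, 0), b=4, steps=6)
out_deg, in_deg = defaultdict(int), defaultdict(int)
for u, v in edges:
    out_deg[u] += 1
    in_deg[v] += 1
print("branching nodes:", sum(d > 1 for d in out_deg.values()))
print("merging nodes:", sum(d > 1 for d in in_deg.values()))
```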

But the important point is that in its “approach to equilibrium” the system in effect rapidly “spreads out” in coarse-grained state space. Or, in other words, the number of possible states of the system consistent with a particular coarse-grained initial condition increases, corresponding to an increase in what one can consider to be the entropy of the system.

There are many possible ways to set up what we might view as “coarse graining”. An example of another possibility is to focus on the values of a particular block of cells, and then to ignore the values of all other cells. But it typically doesn’t take long for the effects of other cells to “seep into” the block we’re looking at:

So what is the bigger picture? The basic point is that insofar as the evolution of each individual microscopic state “leads to randomness”, it’ll tend to end up in a different “coarse-grained bin”. And the result is that even if one starts with a tightly defined coarse-grained description, it’ll inevitably tend to “spread out”, thereby encompassing more states and increasing the entropy.

In a sense, looking at entropy and coarse graining is just a less direct way to detect that a system tends to “produce effective randomness”. And while it may have seemed like a convenient formalism when one was, for example, trying to tease things out from systems with continuous variables, it now feels like a rather indirect way to get at the core phenomenon of the Second Law.

It’s useful to understand a few more connections, however. Let’s say one’s trying to work out the average value of something (say particle density) in a system. What do we mean by “average”? One possibility is that we take an “ensemble” of possible states of the system, then find the average across these. But another possibility is that we instead look at the average across successive states in the evolution of the system. The “ergodic hypothesis” is that the ensemble average will be the same as the time average.

One way this would—at least eventually—be guaranteed is if the evolution of the system is ergodic, in the sense that it eventually visits all possible states. But as we saw above, this isn’t something that’s particularly plausible for most systems. But it also isn’t necessary. Because so long as the evolution of the system is “effectively random” enough, it’ll quickly “sample typical states”, and give essentially the same averages as one would get from sampling all possible states, but without having to laboriously visit all these states.
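Here is a small numerical illustration of the two kinds of average, offered as a sketch. The quantity averaged is the density of black cells. The “ensemble” is taken to be all 2^n states with equal weight, and rule 30 on a cyclic row stands in for the system's evolution. The ensemble average is exactly 1/2 by symmetry. The time average comes from a single trajectory that visits only a tiny fraction of the possible states, and can be compared with it.

```python
import random
from itertools import product

def rule30_step(state):
    n = len(state)
    return tuple(state[(i - 1) % n] ^ (state[i] | state[(i + 1) % n]) for i in range(n))

def ensemble_average(n):
    """Average density over all 2**n states, each weighted equally."""
    states = list(product((0, 1), repeat=n))
    return sum(sum(s) for s in states) / (n * len(states))

def time_average(state, steps):
    """Average density over successive states of one trajectory."""
    total = 0.0
    for _ in range(steps):
        total += sum(state) / len(state)
        state = rule30_step(state)
    return total / steps

print(ensemble_average(12))  # 0.5
rng = random.Random(0)
start = tuple(rng.randint(0, 1) for _ in range(201))
print(time_average(start, 1000))  # compare with the ensemble value
```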

How does one tie all this down with rigorous, mathematical-style proofs? Well, it’s difficult. And in a first approximation not much progress has been made on this for more than a century. But having seen that the core phenomenon of the Second Law can be reduced to an essentially purely computational statement, we’re now in a position to examine this in a different—and I think ultimately very clarifying—way.

Why the Second Law Works

At its core the Second Law is essentially the statement that “things tend to get more random”. And in a sense the ultimate driver of this is the surprising phenomenon of computational irreducibility I identified in the 1980s—and the remarkable fact that even from simple initial conditions simple computational rules can generate behavior of great complexity. But there are definitely additional nuances to the story.

For example, we’ve seen that—particularly in a reversible system—it’s always in principle possible to set up initial conditions that will evolve to “magically produce” whatever “simple” configuration we want. And when we say that we generate “apparently random” states, our “analyzer of randomness” can’t go in and invert the computational process that generated the states. Similarly, when we talk about coarse-grained entropy and its increase, we’re assuming that we’re not inventing some elaborate coarse-graining procedure that’s specially set up to pick out collections of states with “special” behavior.

But there’s really just one principle that governs all these things: that whatever method we have to prepare or analyze states of a system is somehow computationally bounded. This isn’t as such a statement of physics. Rather, it’s a general statement about observers, or, more specifically, observers like us.

We could imagine some very detailed model for an observer, or for the experimental apparatus they use. But the key point is that the details don’t matter. Really all that matters is that the observer is computationally bounded. And it’s then the basic computational mismatch between the observer and the computational irreducibility of the underlying system that leads us to “experience” the Second Law.

At a theoretical level we can imagine an “alien observer”—or even an observer with technology from our own future—that would not have the same computational limitations. But the point is that insofar as we are interested in explaining our own current experience, and our own current scientific observations, what matters is the way we as observers are now, with all our computational boundedness. And it’s then the interplay between this computational boundedness, and the phenomenon of computational irreducibility, that leads to our basic experience of the Second Law.

At some level the Second Law is a story of the emergence of complexity. But it’s also a story of the emergence of simplicity. For the very statement that things go to a “completely random equilibrium” implies great simplification. Yes, if an observer could look at all the details they would see great complexity. But the point is that a computationally bounded observer necessarily can’t look at those details, and instead the features they identify have a certain simplicity.

And so it is, for example, that even though in a gas there are complicated underlying molecular motions, it’s still true that at an overall level a computationally bounded observer can meaningfully discuss the gas—and make predictions about its behavior—purely in terms of things like pressure and temperature that don’t probe the underlying details of molecular motions.

In the past one might have thought that anything like the Second Law must somehow be specific to systems made from things like interacting particles. But in fact the core phenomenon of the Second Law is much more general, and in a sense purely computational, depending only on the basic computational phenomenon of computational irreducibility, together with the fundamental computational boundedness of observers like us.

And given this generality it’s perhaps not surprising that the core phenomenon appears far beyond where anything like the Second Law has normally been considered. In particular, in our Physics Project it now emerges as fundamental to the structure of space itself—as well as to the phenomenon of quantum mechanics. For in our Physics Project we imagine that at the lowest level everything in our universe can be represented by some essentially computational structure, conveniently described as a hypergraph whose nodes are abstract “atoms of space”. This structure evolves by following rules, whose operation will typically show all sorts of computational irreducibility. But now the question is how observers like us will perceive all this. And the point is that through our limitations we inevitably come to various “aggregate” conclusions about what’s going on. It’s very much like with the gas laws and their broad applicability to systems involving different kinds of molecules. Except that now the emergent laws are about spacetime and correspond to the equations of general relativity.

But the basic intellectual structure is the same. Except that in the case of spacetime, there’s an additional complication. In thermodynamics, we can imagine that there’s a system we’re studying, and the observer is outside it, “looking in”. But when we’re thinking about spacetime, the observer is necessarily embedded within it. And it turns out that there’s then one additional feature of observers like us that’s important. Beyond the statement that we’re computationally bounded, it’s also important that we assume that we’re persistent in time. Yes, we’re made of different atoms of space at different moments. But somehow we assume that we have a coherent thread of experience. And this is crucial in deriving our familiar laws of physics.

We’ll talk more about it later, but in our Physics Project the same underlying setup is also what leads to the laws of quantum mechanics. Of course, quantum mechanics is notable for the apparent randomness associated with observations made in it. And what we’ll see later is that in the end the same core phenomenon responsible for randomness in the Second Law also appears to be what’s responsible for randomness in quantum mechanics.

The interplay between computational irreducibility and computational limitations of observers turns out to be a central phenomenon throughout the multicomputational paradigm and its many emerging applications. It’s core to the fact that observers can experience computationally reducible laws in all sorts of samplings of the ruliad. And in a sense all of this strengthens the story of the origins of the Second Law. Because it shows that what might have seemed like arbitrary features of observers are actually deep and general, transcending a vast range of areas and applications.

But even given the robustness of features of observers, we can still ask about the origins of the whole computational phenomenon that leads to the Second Law. Ultimately it begins with the Principle of Computational Equivalence, which asserts that systems whose behavior is not obviously simple will tend to be equivalent in their computational sophistication. The Principle of Computational Equivalence has many implications. One of them is computational irreducibility, associated with the fact that “analyzers” or “predictors” of a system cannot be expected to have any greater computational sophistication than the system itself, and so are reduced to just tracing each step in the evolution of a system to find out what it does.

Another implication of the Principle of Computational Equivalence is the ubiquity of computation universality. And this is something we can expect to see “underneath” the Second Law. Because we can expect that systems like the particle cellular automaton—or, for that matter, the hard-sphere gas—will be provably capable of universal computation. Already it’s easy to see that simple logic gates can be constructed from configurations of particles, but a full demonstration of computation universality will be considerably more elaborate. And while it’d be nice to have such a demonstration, there’s still more that’s needed to establish full computational irreducibility of the kind the Principle of Computational Equivalence implies.

As we’ve seen, there are a variety of “indicators” of the operation of the Second Law. Some are based on looking for randomness or compression in individual states. Others are based on computing coarse grainings and entropy measures. But with the computational interpretation of the Second Law we can expect to translate such indicators into questions in areas like computational complexity theory.

At some level we can think of the Second Law as being a consequence of the dynamics of a system so “encrypting” the initial conditions of a system that no computations available to an “observer” can feasibly “decrypt” it. And indeed as soon as one looks at “inverting” coarse-grained results one is immediately faced with fairly classic NP problems from computational complexity theory. (Establishing NP completeness in a particular case remains challenging, just like establishing computation universality.)

Textbook Thermodynamics

In our discussion here, we’ve treated the Second Law of thermodynamics primarily as an abstract computational phenomenon. But when thermodynamics was historically first being developed, the computational paradigm was still far in the future, and the only way to identify something like the Second Law was through its manifestations in terms of physical concepts like heat and temperature.

The First Law of thermodynamics asserted that heat was a form of energy, and that overall energy was conserved. The Second Law then tried to characterize the nature of the energy associated with heat. And a core idea was that this energy was somehow incoherently spread among a large number of separate microscopic components. But ultimately thermodynamics was always a story of energy.

But is energy really a core feature of thermodynamics or is it merely “scaffolding” relevant for its historical development and early practical applications? In the hard-sphere gas example that we started from above, there’s a pretty clear notion of energy. But quite soon we largely abstracted energy away. Though in our particle cellular automaton we do still have something somewhat analogous to energy conservation: we have conservation of the number of non-white cells.

In a traditional physical system like a gas, temperature is a measure of the average energy per degree of freedom. But in something like our particle cellular automaton, we’re effectively assuming that all particles always have the same energy—so there is for example no way to “change the temperature”. Or, put another way, what we might consider as the energy of the system is basically just given by the number of particles in the system.

Does this simplification affect the core phenomenon of the Second Law? No. That’s something much stronger, and quite independent of these details. But in the effort to make contact with recognizable “textbook thermodynamics”, it’s useful to consider how we’d add in ideas like heat and temperature.

In our discussion of the Second Law, we’ve identified entropy with the log of the number of states consistent with a constraint. But more traditional thermodynamics involves formulas like dS = dQ/T, relating a change in entropy to the heat transferred at temperature T.

When the Second Law was first introduced, there were several formulations given, all initially referencing energy. One formulation stated that “heat does not spontaneously go from a colder body to a hotter”. And even in our particle cellular automaton we can see a fairly direct version of this. Our proxy for “temperature” is density of particles. And what we observe is that an initial region of higher density tends to “diffuse” out:

Another formulation of the Second Law talks about the impossibility of systematically “turning heat into mechanical work”. At a computational level, the analog of “mechanical work” is systematic, predictable behavior. So what this is saying is again that systems tend to generate randomness, and to “remove predictability”.

In a sense this is a direct reflection of computational irreducibility. To get something that one can “harness as mechanical work” one needs something that one can readily predict. But the whole point is that the presence of computational irreducibility makes prediction take an irreducible amount of computational work—that is beyond the capabilities of an “observer like us”.

Closely related is the statement that it’s not possible to make a perpetual motion machine (“of the second kind”, i.e. violating the Second Law), that continually “makes systematic motion” from “heat”. In our computational setting this would be like extracting a systematic, predictable sequence of bits from our particle cellular automaton, or from something like rule 30. And, yes, if we had a device that could for example systematically predict rule 30, then it would be straightforward, say, “just to pick out black cells”, and effectively to derive a predictable sequence. But computational irreducibility implies that we won’t be able to do this, without effectively just directly reproducing what rule 30 does, which an “observer like us” doesn’t have the computational capability to do.

Much of the textbook discussion of thermodynamics is centered around the assumption of “equilibrium”—or something infinitesimally close to it—in which one assumes that a system behaves “uniformly and randomly”. Indeed, the Zeroth Law of thermodynamics is essentially the statement that “statistically unique” equilibrium can be achieved, which in terms of energy becomes a statement that there is a unique notion of temperature.

Once one has the idea of “equilibrium”, one can then start to think of its properties as purely being functions of certain parameters—and this opens up all sorts of calculus-based mathematical opportunities. That anything like this makes sense depends, however, yet again on “perfect randomness as far as the observer is concerned”. Because if the observer could notice a difference between different configurations, it wouldn’t be possible to treat all of them as just being “in the equilibrium state”.

Needless to say, while the intuition of all this is made rather clear by our computational view, there are details to be filled in when it comes to any particular mathematical formulation of features of thermodynamics. As one example, let’s consider a core result of traditional thermodynamics: the Maxwell–Boltzmann exponential distribution of energies for individual particles or other degrees of freedom.

To set up a discussion of this, we need to have a system where there can be many possible microscopic amounts of energy, say, associated with some kind of idealized particles. Then we imagine that in “collisions” between such particles energy is exchanged, but the total is always conserved. And the question is how energy will eventually be distributed among the particles.

As a first example, let’s imagine that we have a collection of particles which evolve in a series of steps, and that at each step particles are paired up at random to “collide”. And, further, let’s assume that the effect of the collision is to randomly redistribute energy between the particles, say with a uniform distribution.
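Here is a minimal Python sketch of this random-pairing process. It assumes only the rules just stated: a fresh random pairing at each step, and a uniform random split of each pair's combined energy. The function name `random_pair_collisions`, the particle count, the number of steps and the seed are all illustrative choices, not anything taken from the pictures.

```python
import random


def random_pair_collisions(n_particles=10_000, steps=100, seed=0):
    """Random-pairing collision model (illustrative sketch).

    All particles start with energy 1. At each step the particles are shuffled
    and paired up; each pair's combined energy is split between the two
    particles at a uniformly random fraction, so energy is conserved pairwise.
    """
    rng = random.Random(seed)
    energies = [1.0] * n_particles
    order = list(range(n_particles))
    for _ in range(steps):
        rng.shuffle(order)                       # new random pairing each step
        for i in range(0, n_particles - 1, 2):   # with an odd count, one particle sits out
            a, b = order[i], order[i + 1]
            total = energies[a] + energies[b]
            energies[a] = rng.random() * total   # uniform share for particle a
            energies[b] = total - energies[a]    # the rest goes to particle b
    return energies


energies = random_pair_collisions()
mean = sum(energies) / len(energies)
below = sum(e < mean for e in energies) / len(energies)
print(f"mean energy {mean:.6f}, fraction below mean {below:.3f}")
```

For an exponential distribution the fraction of particles below the mean is 1 – 1/e ≈ 0.632, so the second number should land near that value, while the mean stays at 1 because every collision conserves energy.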

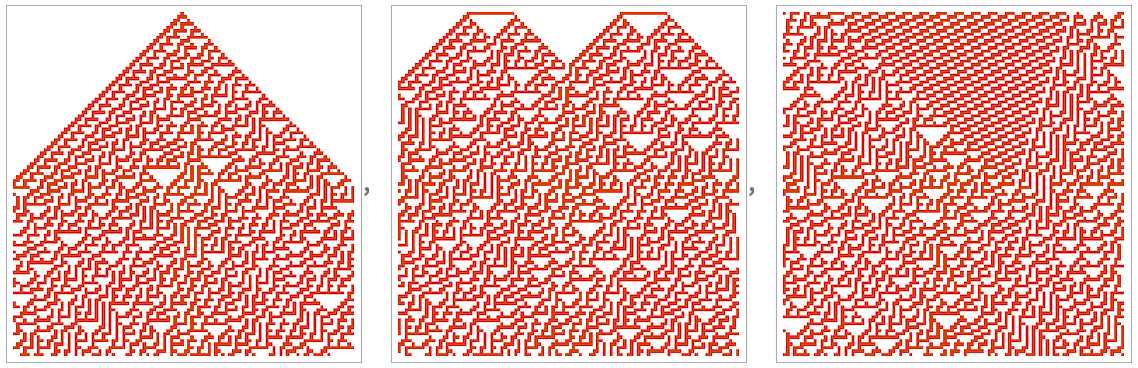

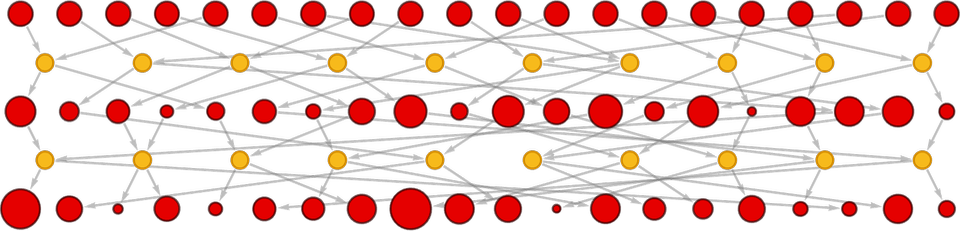

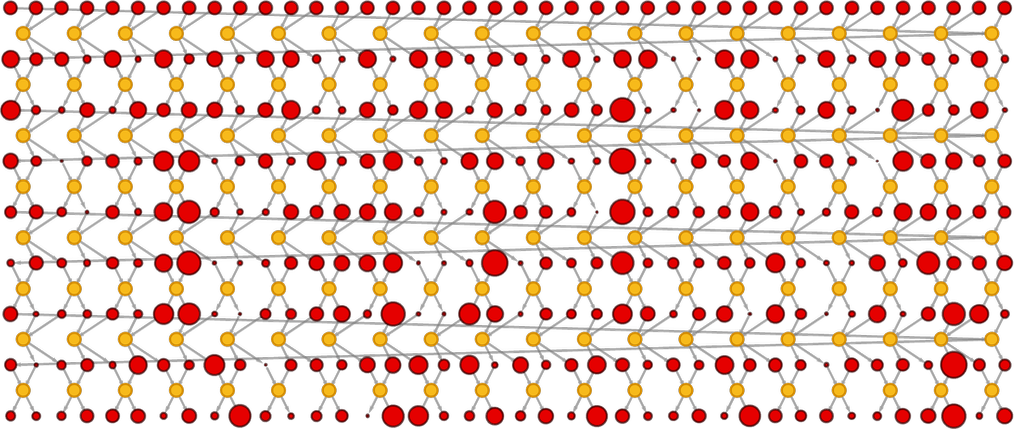

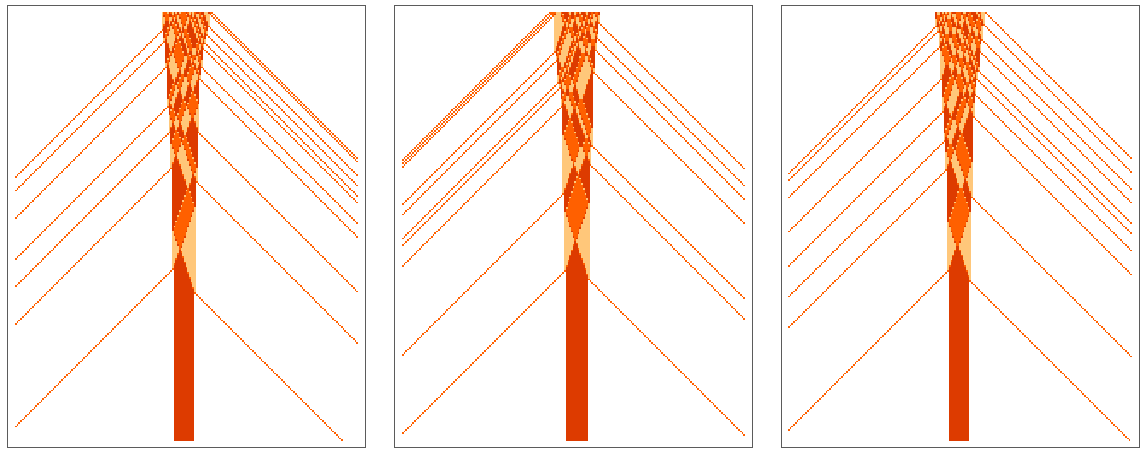

We can represent this process using a token-event graph, where the events (indicated here in yellow) are the collisions, and the tokens (indicated here in red) represent states of particles at each step. The energy of the particles is indicated here by the size of the “token dots”:

Continuing this a few more steps we get:

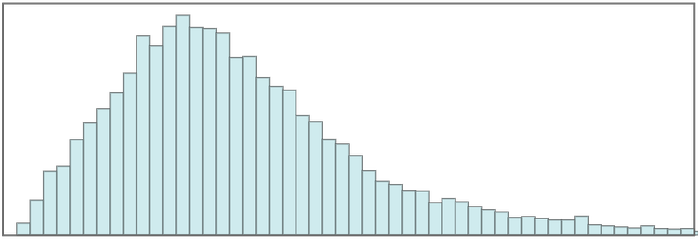

At the beginning we started with all particles having equal energies. But after a number of steps the particles have a distribution of energies—and the distribution turns out to be accurately exponential, just like the standard Maxwell–Boltzmann distribution:

If we look at the distribution on successive steps we see rapid evolution to the exponential form:

Why we end up with an exponential is not hard to see. In the limit of enough particles and enough collisions, one can imagine approximating everything purely in terms of probabilities (as one does in deriving Boltzmann transport equations, basic SIR models in epidemiology, etc.). Then if the probability for a particle to have energy $E$ is $f(E)$, in every collision once the system has “reached equilibrium” one must have $f(E_1)\,f(E_2) = f(E_3)\,f(E_4)$ where $E_1 + E_2 = E_3 + E_4$, and the only solution to this is $f(E) \sim e^{-\beta E}$.
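The functional-equation step can be spelled out in a few lines, assuming $f$ is positive (so its logarithm exists) and continuous on $E \ge 0$:

```latex
% Equilibrium: every energy-conserving collision (E_1, E_2) -> (E_3, E_4) must balance.
f(E_1)\, f(E_2) = f(E_3)\, f(E_4) \quad \text{whenever } E_1 + E_2 = E_3 + E_4
% Write g(E) = \log f(E). Then g(x) + g(y) depends only on x + y, say g(x) + g(y) = h(x + y).
% Setting y = 0 gives h(x) = g(x) + g(0). With c(x) = g(x) - g(0) this is Cauchy's equation
c(x) + c(y) = c(x + y), \qquad x, y \ge 0
% whose only continuous solutions are linear, c(x) = -\beta x. Therefore
f(E) = f(0)\, e^{-\beta E}, \qquad \beta > 0 \text{ so that } f \text{ is normalizable on } E \ge 0
```

Taking logarithms is what turns the multiplicative condition on probabilities into an additive condition on energies, and additivity is what forces linearity in the exponent.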

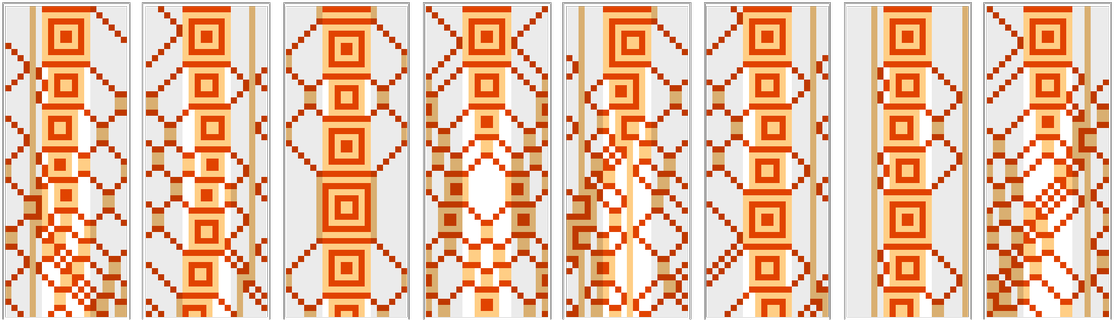

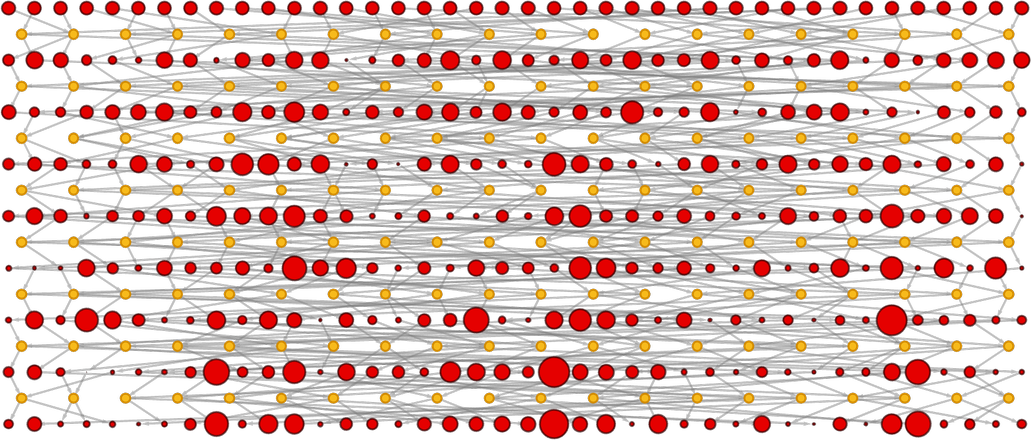

In the example we’ve just given, there’s in effect “immediate mixing” between all particles. But what if we set things up more like in a cellular automaton—with particles only colliding with their local neighbors in space? As an example, let’s say we have our particles arranged on a line, with alternating pairs colliding at each step in analogy to a block cellular automaton (the long-range connections represent wraparound of our lattice):

In the picture above we’ve assumed that in each collision energy is randomly redistributed between the particles. And with this assumption it turns out that we again rapidly evolve to an exponential energy distribution:
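The local version differs from the earlier sketch only in which particles get paired. This Python sketch makes the same assumptions as before (uniform random split, all energies starting at 1), with `n_cells` particles on a ring; the function name and parameters are hypothetical. On even steps the pairs are (0, 1), (2, 3) and so on; on odd steps they are (1, 2), (3, 4) and so on, with the last cell paired with cell 0 to give the wraparound.

```python
import random


def local_block_collisions(n_cells=2000, steps=500, seed=0):
    """Ring of particles; alternating neighbor pairs collide, block-CA style."""
    if n_cells % 2:
        raise ValueError("n_cells must be even so the ring splits into pairs")
    rng = random.Random(seed)
    energies = [1.0] * n_cells
    for t in range(steps):
        offset = t % 2                              # shift the pairing on odd steps
        for i in range(offset, n_cells + offset, 2):
            a, b = i % n_cells, (i + 1) % n_cells   # % n_cells produces the wraparound pair
            total = energies[a] + energies[b]
            energies[a] = rng.random() * total      # uniform random share for the left cell
            energies[b] = total - energies[a]       # remainder for the right cell
    return energies


energies = local_block_collisions()
print(sum(e < 1.0 for e in energies) / len(energies))  # expected near 1 - 1/e ≈ 0.632
```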

But now that we have a spatial structure, we can display what’s going on in more of a cellular automaton style—where here we’re showing results for 3 different sequences of random energy exchanges:

And once again, if we run long enough, we eventually get an exponential energy distribution for the particles. But note that the setup here is very different from something like rule 30—because we’re continuously injecting randomness from the outside into the system. And as a minimal way to avoid this, consider a model where at each collision the particles get fixed fractions (1 – α)/2 and (1 + α)/2 of their combined energy:

Here’s what happens with energy concentrated into a few particles

and with random initial energies:

And in all cases the system eventually evolves to a “pure checkerboard” in which the only particle energies are (1 – α)/2 and (1 + α)/2. (For α = 0 the system corresponds to a discrete version of the diffusion equation.) But if we look at the structure of the system, we can think of it as a block cellular automaton with continuous cell values. And as with other cellular automata, there are lots of possible rules that don’t lead to such simple behavior.
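Here is a sketch of the fixed-fraction rule. It assumes, as the checkerboard values suggest, that energies are normalized so the mean energy per cell is 1/2, i.e. each colliding pair holds a total of 1 once things have evened out. In each block the left cell gets the fraction (1 – α)/2 of the pair's combined energy and the right cell gets (1 + α)/2. Which cell counts as "left", the ring size of 16 and α = 0.3 are choices made for this illustration, and `fixed_fraction_ca` is a hypothetical name.

```python
import random


def fixed_fraction_ca(energies, alpha, steps):
    """Continuous-valued block CA on a ring with a fixed energy split.

    In every block (left, right) the pair's combined energy is redistributed as
    (1 - alpha)/2 to the left cell and (1 + alpha)/2 to the right cell; the
    block boundaries shift by one cell on alternate steps.
    """
    e = list(energies)
    n = len(e)
    if n % 2:
        raise ValueError("need an even number of cells")
    left_share, right_share = (1 - alpha) / 2, (1 + alpha) / 2
    for t in range(steps):
        for i in range(t % 2, n + t % 2, 2):
            a, b = i % n, (i + 1) % n
            total = e[a] + e[b]
            e[a], e[b] = left_share * total, right_share * total
    return e


rng = random.Random(0)
start = [rng.random() for _ in range(16)]
scale = 0.5 * len(start) / sum(start)        # rescale so the mean energy per cell is 1/2
start = [x * scale for x in start]
final = fixed_fraction_ca(start, alpha=0.3, steps=1000)
print(sorted({round(x, 6) for x in final}))  # [0.35, 0.65]
```

Setting `alpha=0` makes every collision simply average the two cells, which is the discrete-diffusion case. For 0 < α < 1 the block totals get repeatedly averaged with their neighbors, so they flatten out and only the two fixed shares survive.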

In fact, all we need do is allow α to depend on the energies E1 and E2 of colliding pairs of particles (or, here, the values of cells in each block). As an example, let’s take

And with this setup we once again often see “rule-30-like behavior” in which effectively quite random behavior is generated even without any explicit injection of randomness from outside (the lower panels start at step 1000):

The underlying construction of the rule ensures that total energy is conserved. But what we see is that the evolution of the system distributes it across many elements. And at least if we use random initial conditions

we eventually in all cases see an exponential distribution of energy values (with simple initial conditions it can be more complicated):

The evolution towards this is very much the same as in the systems above. In a sense it depends only on having a suitably randomized energy-conserving collision process, and it takes only a few steps to go from a uniform initial energy distribution to an accurately exponential one:
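Energy conservation in these rules does not depend on which α is chosen. The sketch below uses the same fixed-fraction block update but computes α from the colliding pair's energies. `hypothetical_alpha` is an arbitrary stand-in, not the rule used for the pictures (whose formula isn't reproduced in this text); it is kept inside [–1, 1] so that neither share goes negative. Whatever α is, each pair's total is untouched, so the total over the whole ring is conserved. Whether this particular α produces rule-30-like behavior is not something the sketch establishes.

```python
import math
import random


def hypothetical_alpha(e1, e2):
    # HYPOTHETICAL energy-dependent alpha, chosen only for illustration;
    # sin() keeps it in [-1, 1], so both shares stay between 0 and the pair total.
    return math.sin(5.0 * (e1 - e2))


def variable_fraction_ca(energies, alpha_fn, steps):
    """Block CA on a ring where the split fraction depends on the colliding energies."""
    e = list(energies)
    n = len(e)
    if n % 2:
        raise ValueError("need an even number of cells")
    for t in range(steps):
        for i in range(t % 2, n + t % 2, 2):
            a, b = i % n, (i + 1) % n
            total = e[a] + e[b]
            alpha = alpha_fn(e[a], e[b])
            e[a] = (1 - alpha) / 2 * total
            e[b] = total - e[a]          # remainder, so the pair total is preserved (up to rounding)
    return e


rng = random.Random(1)
start = [rng.random() for _ in range(200)]
final = variable_fraction_ca(start, hypothetical_alpha, steps=1000)
print(sum(start), sum(final))  # equal up to floating-point rounding
```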

So how does this all work in a “physically realistic” hard-sphere gas? Once again we can create a token-event graph, where the events are collisions, and the tokens correspond to periods of free motion of particles. For a simple 1D “Newton’s cradle” configuration, there is an obvious correspondence between the evolution in “spacetime” and the token-event graph:

But we can do exactly the same thing for a 2D configuration. Indicating the energies of particles by the sizes of tokens we get (excluding wall collisions, which don’t affect particle energy)

where the “filmstrip” at the side gives snapshots of the evolution of the system. (Note that in this system, unlike the ones above, there aren’t definite “steps” of evolution; the collisions just happen “asynchronously” at times determined by the dynamics.)

In the initial condition we’re using here, all particles have the same energy. But when we run the system we find that the energy distribution for the particles rapidly evolves to the standard exponential form (though note that here successive panels are “snapshots”, not “steps”):

And because we’re dealing with “actual particles”, we can look not only at their energies, but also at their speeds (related simply by $E = \tfrac{1}{2} m v^2$). When we look at the distribution of speeds generated by the evolution, we find that it has the classic Maxwellian form:
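The step from energies to speeds is just a change of variables. Assume a 2D gas with an exponential energy distribution $\rho(E) = e^{-E/kT}/kT$. Substituting $E = \tfrac{1}{2} m v^2$ gives $\rho(v) = \rho(E)\,\tfrac{dE}{dv} = \tfrac{m v}{kT}\, e^{-m v^2/2kT}$, which is the two-dimensional Maxwell (Rayleigh) form with mean speed $\sqrt{\pi kT/2m}$. Here is a quick Monte Carlo check in units where $m = kT = 1$ (an arbitrary choice), using Python's `random.expovariate`. The function name is hypothetical.

```python
import math
import random


def speeds_from_energies(n=100_000, kT=1.0, m=1.0, seed=0):
    """Sample exponential energies (mean kT) and convert each to a speed via E = m v^2 / 2."""
    rng = random.Random(seed)
    return [math.sqrt(2.0 * rng.expovariate(1.0 / kT) / m) for _ in range(n)]


speeds = speeds_from_energies()
mean_speed = sum(speeds) / len(speeds)
print(mean_speed, math.sqrt(math.pi / 2))  # sample mean vs. Rayleigh mean sqrt(pi kT / 2m)
```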

And it’s this kind of final or “equilibrium” result that’s mainly discussed in typical textbooks of thermodynamics. Such books also tend to talk about things like tradeoffs between energy and entropy, and define things like the (Helmholtz) free energy F = U – T S (where U is internal energy, T is temperature and S is entropy) that are used in answering questions like whether particular chemical reactions will occur under certain conditions.

But given our discussion of energy here, and our earlier discussion of entropy, it’s at first quite unclear how these quantities might relate, and how they can trade off against each other, say in the formula for free energy. But in some sense what connects energy to the standard definition of entropy in terms of the logarithm of the number of states is the Maxwell–Boltzmann distribution, with its exponential form. In the usual physical setup, the Maxwell–Boltzmann distribution is basically $e^{-E/kT}$, where $T$ is the temperature, $k$ is Boltzmann’s constant, and $kT$ is the average energy.

But now imagine we’re trying to figure out whether some process—say a chemical reaction—will happen. If there’s an energy barrier, say associated with an energy difference Δ, then according to the Maxwell–Boltzmann distribution there’ll be a probability proportional to $e^{-\Delta/kT}$ for molecules to have a high enough energy to surmount that barrier. But the next question is how many configurations of molecules there are in which molecules will “try to surmount the barrier”. And that’s where the entropy comes in. Because if the number of possible configurations is Ω, the entropy S is given by k log Ω, so that in terms of S, $\Omega = e^{S/k}$. But now the “average number of molecules which will surmount the barrier” is roughly given by $\Omega\, e^{-\Delta/kT} = e^{S/k}\, e^{-\Delta/kT} = e^{-(\Delta - T S)/kT}$, so that energy and entropy enter only through the combination Δ – T S, which has the same form as the free energy F = U – T S.

This argument is quite rough, but it captures the essence of what’s going on. And at first it might seem like a remarkable coincidence that there’s a logarithm in the definition of entropy that just “conveniently fits together” like this with the exponential in the Maxwell–Boltzmann distribution. But it’s actually not a coincidence at all. The point is that what’s really fundamental is the concept of counting the number of possible states of a system. But typically this number is extremely large. And we need some way to “tame” it. We could in principle use some slow-growing function other than log to do this. But if we use log (as in the standard definition of entropy) we precisely get the tradeoff with energy in the Maxwell–Boltzmann distribution.
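Here is a concrete check of this bookkeeping, using made-up values in natural units ($k = 1$): barrier Δ = 5, temperature T = 2 and entropy S = 3, so that $\Omega = e^{S/k}$. Multiplying the number of configurations by the Boltzmann factor gives the same number as a single exponential in Δ – T S. The sketch works with logarithms so that realistic (astronomically large) Ω would not overflow; the function name is hypothetical.

```python
import math


def log_count_over_barrier(delta, T, S, k=1.0):
    """Two ways of writing log(Omega * exp(-delta / kT)) with Omega = exp(S / k)."""
    log_omega = S / k                          # from S = k log(Omega)
    separate = log_omega - delta / (k * T)     # log Omega plus log of the Boltzmann factor
    combined = -(delta - T * S) / (k * T)      # exponent of exp(-(delta - T S) / kT)
    return separate, combined


print(log_count_over_barrier(delta=5.0, T=2.0, S=3.0))  # (0.5, 0.5)
```

In this form the tradeoff is explicit: raising S by 1 at T = 2 has exactly the same effect as lowering the barrier Δ by 2.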

There is also another convenient feature of using log. If two systems are independent, one with $\Omega_1$ states, and the other with $\Omega_2$ states, then a system that combines these (without interaction) will have $\Omega_1 \Omega_2$ states. And if $S = k \log \Omega$, then this means that the entropy of the combined system will just be the sum $S_1 + S_2$ of the entropies of the individual systems. But is this fact actually “fundamentally independent” of the exponential character of the Maxwell–Boltzmann distribution? Well, no. Or at least it comes from the same mathematical idea. Because it’s the fact that in equilibrium the probability $f(E)$ is supposed to satisfy $f(E_1)\,f(E_2) = f(E_3)\,f(E_4)$ when $E_1 + E_2 = E_3 + E_4$ that makes $f(E)$ have its exponential form. In other words, both stories are about exponentials being able to connect additive combination of one quantity with multiplicative combination of another.
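Both halves of this correspondence can be checked numerically. The state counts and the value β = 0.7 below are arbitrary illustrative choices; the Boltzmann constant is its exact value in the 2019 SI.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K (exact value in the 2019 SI)


def entropy(omega, k=K_B):
    """S = k log(Omega) for a system with Omega equally likely states."""
    return k * math.log(omega)


def boltzmann_factor(energy, beta=0.7):
    """f(E) = exp(-beta E); beta = 0.7 is an arbitrary illustrative value."""
    return math.exp(-beta * energy)


# Independent systems: state counts multiply, so entropies add.
omega1, omega2 = 10**20, 10**30            # hypothetical state counts
print(math.isclose(entropy(omega1 * omega2), entropy(omega1) + entropy(omega2)))  # True

# Exponential: the product f(E1) f(E2) depends only on E1 + E2 (both pairs sum to 4.6).
print(math.isclose(boltzmann_factor(1.2) * boltzmann_factor(3.4),
                   boltzmann_factor(2.0) * boltzmann_factor(2.6)))  # True
```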

Having said all this, though, it’s important to understand that you don’t need energy to talk about entropy. The concept of entropy, as we’ve discussed, is ultimately a computational concept, quite independent of physical notions like energy. In many textbook treatments of thermodynamics, energy and entropy are in some sense put on a similar footing. The First Law is about energy. The Second Law is about entropy. But what we’ve seen here is that energy is really a concept at a different level from entropy: it’s something one gets to “layer on” in discussing physical systems, but it’s not a necessary part of the “computational essence” of how things work.

(As an extra wrinkle, in the case of our Physics Project—as to some extent in traditional general relativity and quantum mechanics—there are some fundamental connections between energy and entropy. In particular—related to what we’ll discuss below—the number of possible discrete configurations of spacetime is inevitably related to the “density” of events, which defines energy.)

Towards a Formal Proof of the Second Law

It would be nice to be able to say, for example, that “using computation theory, we can prove the Second Law”. But it isn’t as simple as that. Not least because, as we’ve seen, the validity of the Second Law depends on things like what “observers like us” are capable of. But we can, for example, formulate what the outline of a proof of the Second Law could be like, though to give a full formal proof we’d have to introduce a variety of “axioms” (essentially about observers) that don’t have immediate foundations in existing areas of mathematics, physics or computation theory.

The basic idea is that one imagines a state S of a system (which could just be a sequence of values for cells in something like a cellular automaton). One considers an “observer function” Θ which, when applied to the state S, gives a “summary” of S. (A very simple example would be the run-length encoding that we used above.) Now we imagine some “evolution function” Ξ that is applied to S. The basic claim of the Second Law is that the “sizes” of these summaries normally satisfy the inequality Θ[Ξ[S]] ≥ Θ[S], or in other words, that “compression by the observer” is less effective after the evolution of the system, in effect because the state of the system has “become more random”, as our informal statement of the Second Law suggests.
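Here is a minimal sketch of these ingredients under simple assumptions. The state S is a row of cells on a ring, ξ is one step of rule 30, Ξ = ξ^t is built by applying ξ t times, and the observer function Θ is the size of the run-length encoding (the number of runs of identical cells). The function names are hypothetical. A single run from one simple initial condition illustrates the inequality; it does not prove it.

```python
def rule30_step(cells):
    """One step (xi) of rule 30 on a cyclic row: new = left XOR (center OR right)."""
    n = len(cells)
    return [cells[(i - 1) % n] ^ (cells[i] | cells[(i + 1) % n]) for i in range(n)]


def evolve(cells, t):
    """Xi = xi^t, built the explicit way: apply one step t times."""
    for _ in range(t):
        cells = rule30_step(cells)
    return cells


def observer_summary_size(cells):
    """Theta: size of the run-length encoding, i.e. the number of runs."""
    runs = 1
    for prev, cur in zip(cells, cells[1:]):
        if cur != prev:
            runs += 1
    return runs


width = 201
state = [0] * width
state[width // 2] = 1              # highly ordered initial state: a single black cell
for t in (0, 25, 50, 100):
    print(t, observer_summary_size(evolve(state, t)))
```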

What are the possible forms of Θ and Ξ? It’s slightly easier to talk about Ξ, because we imagine that this is basically any not-obviously-trivial computation, run for an increasing number of steps. It could be repeated application of a cellular automaton rule, or a Turing machine, or any other computational system. We might represent an individual step by an operator ξ, and say that in effect $\Xi = \xi^t$. We can always construct $\xi^t$ by explicitly applying ξ successively t times. But the question of computational irreducibility is whether there’s a shortcut way to get to the same result. And given any specific representation of $\xi^t$ (say, rather prosaically, as a Boolean circuit), we can ask how the size of that representation grows with t.
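For contrast, here is a case where a shortcut is known. Rule 90 (each new cell is the XOR of its two neighbors), grown from a single black cell, gives Pascal's triangle mod 2. So the cell at offset x after t steps is C(t, (t + x)/2) mod 2 when t + x is even and |x| ≤ t, and 0 otherwise, and any cell can be computed directly instead of running all t steps. The sketch below (function names hypothetical) checks this closed form against explicit iteration. For rule 30, as far as is known, no comparable shortcut exists; that is the situation computational irreducibility describes.

```python
from math import comb


def rule90_explicit(t):
    """Run rule 90 for t steps from a single black cell: Xi built as xi applied t times."""
    width = 2 * t + 1                 # the pattern spreads at most one cell per step each way
    cells = [0] * width
    cells[t] = 1
    for _ in range(t):
        cells = [(cells[i - 1] if i > 0 else 0) ^ (cells[i + 1] if i < width - 1 else 0)
                 for i in range(width)]
    return cells


def rule90_shortcut(t):
    """Closed form for the same row: binomial coefficients mod 2, no stepping through time."""
    return [comb(t, (t + x) // 2) % 2 if (t + x) % 2 == 0 else 0
            for x in range(-t, t + 1)]


print(all(rule90_explicit(t) == rule90_shortcut(t) for t in range(64)))  # True
```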

With the current state of computation theory, it’s exceptionally difficult to get definitive general results about minimal sizes of $\xi^t$, though in sufficiently small cases it’s possible to determine this “experimentally”, essentially by exhaustive search. But there’s an increasing amount of at least circumstantial evidence that for many kinds of systems, one can’t do much better than explicitly constructing $\xi^t$, as the phenomenon of computational irreducibility suggests. (One can imagine “toy models”, in which ξ corresponds to some very simple computational process—like a finite automaton—but while this likely allows one to prove things, it’s not at all clear how useful or representative any of the results will be.)

OK, so what about the “observer function” Θ? For this we need some kind of “observer theory” that characterizes what observers—or at least “observers like us”—can do, in the same kind of way that standard computation theory characterizes what computational systems can do. There are clearly some features Θ must have. For example, it can’t involve unbounded amounts of computation. But realistically there’s more than that. Somehow the role of observers is to take all the details that might exist in the “outside world”, and reduce or compress these to some “smaller” representation that can “fit in the mind of the observer”, and allow the observer to “make decisions” that abstract from the details of the outside world whatever specifics the observer “cares about”. And—like a construction such as a Turing machine—one must in the end have some way of building up “possible observers” from something like basic primitives.